xvisor

Contributors

- Spring 2015

- 沈宗穎, 李育丞, 蘇誌航, 張仁傑, 鄧維岱

Hackpad

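Virtualization

Virtualization creates a virtual version of an operating system or server so that the available resources can be managed and allocated properly.

Hypervisor (Virtual Machine Monitor, VMM)

The main job of a hypervisor is to manage virtual machines (VMs) so that each VM runs independently; the hypervisor also manages the underlying hardware.

- Host Machine: the physical machine that runs the hypervisor; in some designs the host OS itself runs on top of the hypervisor

- Guest Machine: a virtual machine that runs on top of the hypervisor

The three major open-source hypervisors today are Linux KVM, Xen, and Xvisor; see this reference.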

使用時機及益處

- 工作負載整合 (Workload Consolidation)

- 許多舊有的系統或程式在各自的硬體上,我們可以透過hypervisor的分配,共同使用硬體資源

- 以VMware資料顯示,如果透過VMware vSphere來進行伺服器的虛擬化,可以降低硬體和營運成本達 50%,並減少能源成本達 80%,且每完成一個虛擬化伺服器工作負載,每年可節省的成本超過 $3,000 美元。最多可減少 70% 佈建新伺服器所需的時間。source: VMware

- EX:成大電機系電腦教室

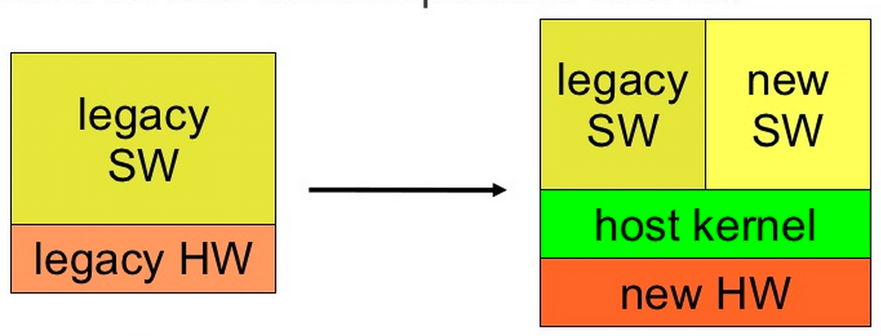

- 支援舊有軟體 (Legacy Software)

- 老舊過時的軟硬體透過虛擬化移植到新的硬體或OS上

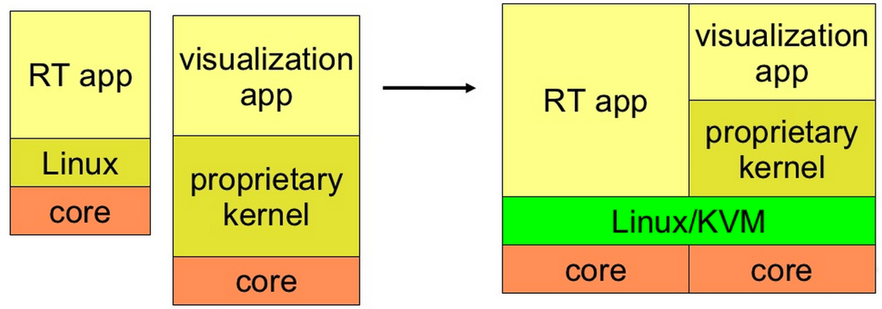

- APP運行於不同kernel上,可透過KVM (Kernel-based Virtual Machine)整合並切換kernel來運行app,為Bare-Metal概念

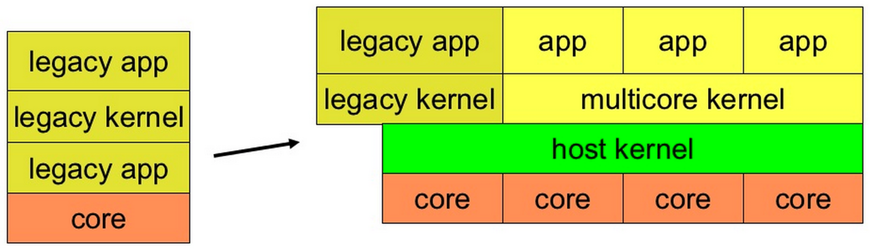

- 啟用多核 (Multicore Enablement)

- 原本舊有的APP及kernel運行在單一core上,透過虛擬化,我們可以移植到有多個core的架構,並且透過host kernel去運行

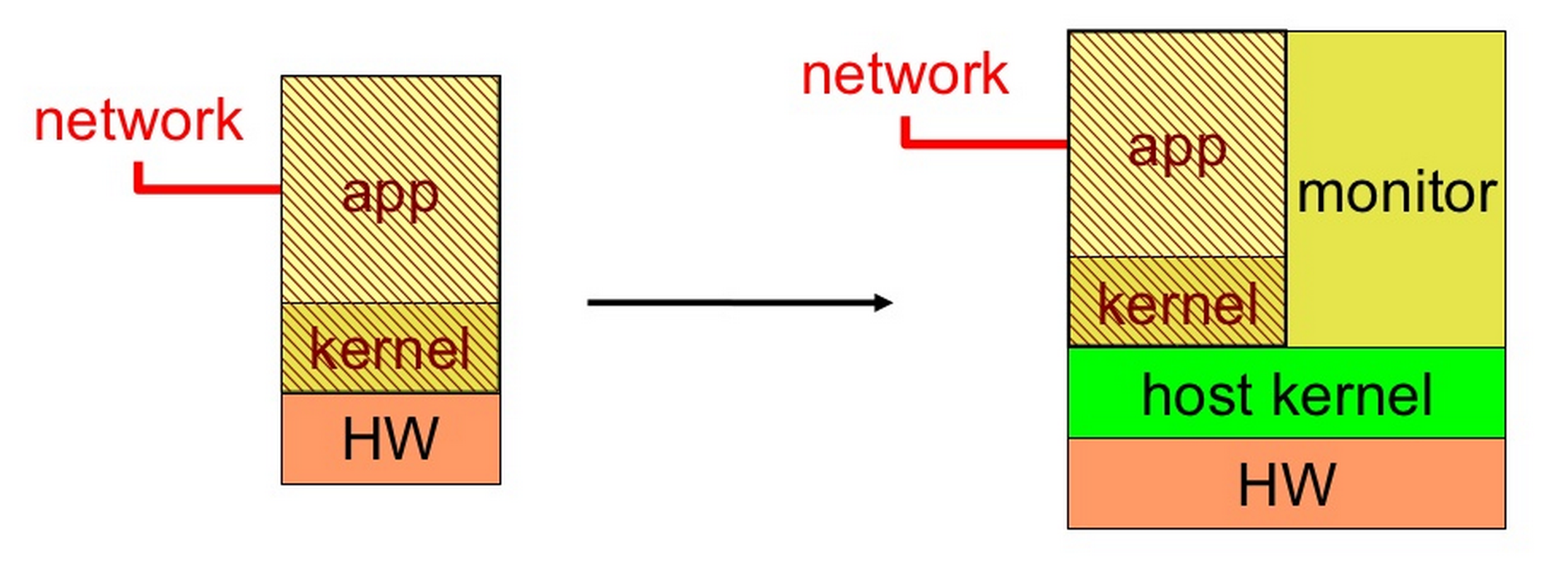

- 提高可靠性 (Improved Reliability)

- 因有host kernel,我們不需要太多的硬體

- 安全監控 (Secure Monitoring)

- ARM SMC(secure monitor call) to secure monitor mode

- kernel等級或者是rootkits的攻擊通常都是在執行擁有特權(privilege)模式的時候發生的,而透過虛擬化,我們擁有更高權限的hypervisor去控制memory protection或程式的排程優先順序

- 最主要我們是透過hypervisor去把security tools也就是monitor孤立出來,不會把monitor與untrusted VM放在一起,並把他們分別移到trusted secure VM,再透過 introspection 去掃描或觀察這些程式碼

- source: http://research.microsoft.com/pubs/153179/sim-ccs09.pdf (主要探討Secure in VM-monitor)

- source: https://www.ma.rhul.ac.uk/static/techrep/2011/RHUL-MA-2011-09.pdf

- In-VM 有較好的performance,Out-of-VM則有較好的安全性


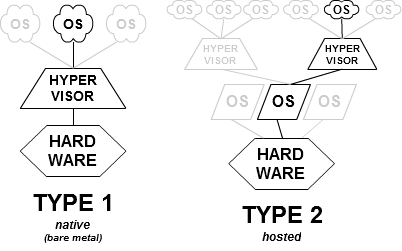

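Types of hypervisors

- Type-1: native (bare-metal) hypervisors

- Type-2: hosted hypervisors

- The hypervisor runs on the host operating system and provides virtualization services from there

- Examples: VMware Workstation, VirtualBox

- Type-1 vs. Type-2

- Type-1

- Talks to the hardware directly and offers better security (control stays in the hypervisor); well suited to server virtualization

- Because it runs directly on the hardware, it is often single-purpose

- Type-1 hypervisors come in two designs

- monolithic

- Device drivers are part of the hypervisor (that is, kernel space). With no intermediation, performance between applications and hardware is good, but the hypervisor carries a large amount of driver code, which makes it easier to attack

- Complete monolithic

- A single piece of hypervisor software handles host hardware access, CPU virtualization, and guest I/O emulation; Xvisor belongs to this category

- Partially monolithic

- The hypervisor is an extension of a GPOS. Unlike the complete monolithic design, host hardware access and CPU virtualization are handled in the GPOS kernel, while guest I/O emulation is provided by user-space software such as QEMU; KVM belongs to this category

- microkernel

- The hypervisor is a small micro-kernel that handles CPU virtualization and basic hardware access itself and relies on a management guest (for example, Xen's Dom0) for full host hardware access and guest I/O emulation

- Device drivers are installed in the parent guest (that is, user space). The parent guest is a privileged VM that manages the non-privileged child guest VMs. A child guest that needs a hardware resource must go through the parent guest; performance drops, but the design is more secure than the monolithic one. Xen belongs to this category

- Type-2

- Supports a wider range of I/O devices and services

- Because most operations go through the host OS, performance is lower than Type-1; commonly used on client machines where efficiency matters less

Source: https://www.ma.rhul.ac.uk/static/techrep/2011/RHUL-MA-2011-09.pdf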

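- (Supplement) Type-0

- Because Type-1 and Type-2 hypervisors are complex and hard to configure on embedded systems, the US software company LynuxWorks proposed the Type-0 hypervisor in 2012. It needs no kernel or operating system, although some argue that this cannot be achieved.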

source: http://en.wikipedia.org/wiki/Hypervisor

source: http://www.lynx.com/whitepaper/the-rise-of-the-type-zero-hypervisor

source: http://www.virtualizationpractice.com/type-0-hypervisor-fact-or-fiction-17159

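Virtualization theorems

Popek and Goldberg virtualization requirements, 1974

The theory proposed by Gerald Popek and Robert Goldberg is still a convenient way to decide whether a computer architecture supports virtualization, and it serves as a guideline for designing virtualizable architectures.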

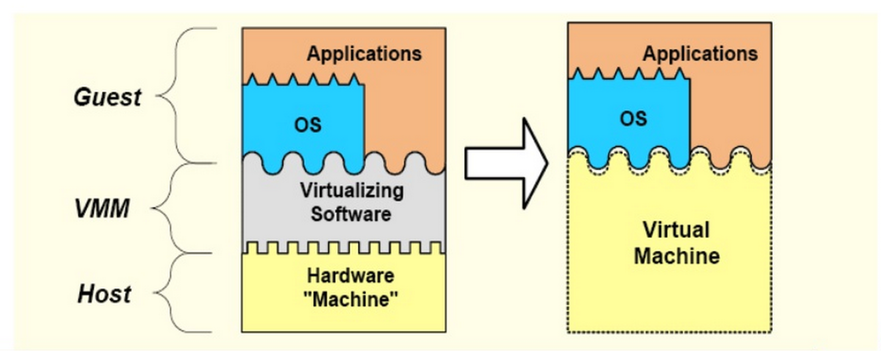

Definition of a VM

- A virtual machine is taken to be an efficient, isolated duplicate of the real machine. It is the environment created by the virtual machine monitor.

Definition of a VMM

- VMM is the piece of software that provides the abstraction of a virtual machine

An environment created by a VMM must satisfy the following three properties:

- Equivalence / Fidelity

- A program running under the VMM should behave exactly as it would when running directly on the same machine

- The VMM provides an environment for programs which is essentially identical with the original machine

- Resource control / Safety

- The VMM must have complete control of the virtualized resources.

- The VMM is in complete control of system resources.

- Efficiency / Performance

- The frequently executed majority of machine instructions should run directly on the processor without VMM intervention

- It demands that a statistically dominant subset of the virtual processor’s instructions be executed directly by the real processor, with no software intervention by the VMM.

To derive the virtualization theorems, the two authors classify the instructions of an ISA (for a third-generation computer) into three groups:

- Privileged instructions

- Must execute with sufficient privilege

- Trap when the processor is in user mode

- Do not trap when the processor is in supervisor mode

- Privileged instructions are independent of the virtualization process. They are merely characteristics of the machine which may be determined from reading the principles of operation.

- Examples

- Access I/O devices: Poll for IO, perform DMA, catch hardware interrupt

- Manipulate memory management: Set up page tables, load/flush the TLB and CPU caches, etc.

- Configure various “mode bits”: Interrupt priority level, software trap vectors, etc.

- Call halt instruction: Put CPU into low-power or idle state until next interrupt

- Control sensitive instructions

- Instructions that attempt to change the configuration of system resources (recall that the VMM must keep complete control of system resources)

- Instructions that affect the processor mode without going through the memory trap sequence

- It attempts to change the amount of (memory) resources available, or affects the processor mode without going through the memory trap sequence

- Behavior sensitive instructions

- Instructions whose result depends on the resource configuration (the contents of the relocation-bounds register or the processor mode)

- Location sensitive: load physical address (IBM 360/67 LRA)

- Mode sensitive: move from previous instruction space (DEC PDP-11/45 MVPI)

By definition, an instruction is sensitive if it is control sensitive or behavior sensitive; otherwise it is innocuous. In addition, if every sensitive instruction is privileged, a VMM can be constructed.

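- Structure of a VMM

- A VMM is software, called the control program here, and it consists of several modules

- The control program modules fall roughly into three groups

- Dispatcher

- The top-level control module; the dispatcher decides which module to call

- Allocator

- Decides how system resources are allocated and keeps a resource table

- When the VMM hosts a single VM, the allocator only has to keep the VM and the VMM separate

- When the VMM hosts several VMs, the allocator must avoid giving the same resource to more than one VM at the same time

- When a VM executes a privileged instruction that would change system resources, the dispatcher invokes the allocator

- Example: resetting the relocation-bounds register.

- Interpreter

- One interpreter routine per instruction

- Its main job is to simulate the effect of the instruction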

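- The theorems stated by Popek and Goldberg

- Theorem 1. For a conventional third-generation computer, a VMM can be constructed if the set of sensitive instructions is a subset of the set of privileged instructions

- More intuitively, whenever a sensitive instruction would affect the VMM, it traps, so the VMM keeps complete control; this guarantees the resource-control property described above

- Sensitive instructions that the OS used to execute in kernel mode no longer work once the OS is moved to user mode, so they must trap to the hypervisor, which executes them.

- Theorem 2. A conventional third-generation computer is recursively virtualizable if it meets the following two conditions

- It is virtualizable

- A VMM without timing dependencies can be constructed for it

Paper: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.141.4815&rep=rep1&type=pdf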

Source: http://en.wikipedia.org/wiki/Popek_and_Goldberg_virtualization_requirements

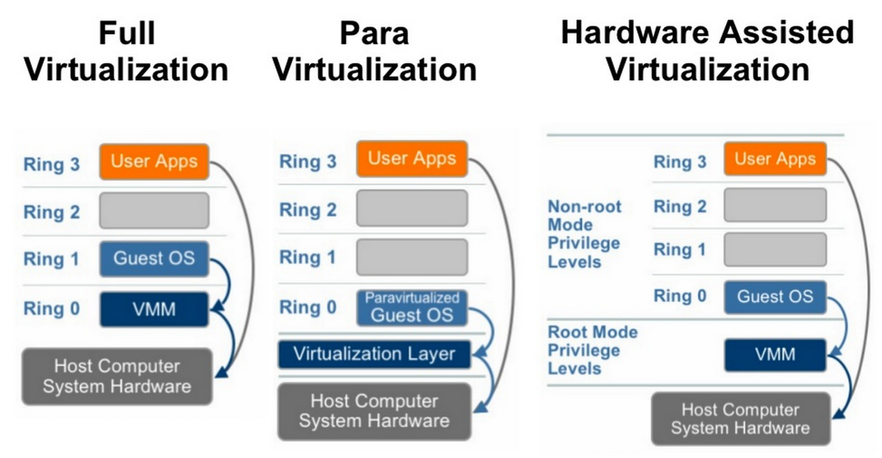

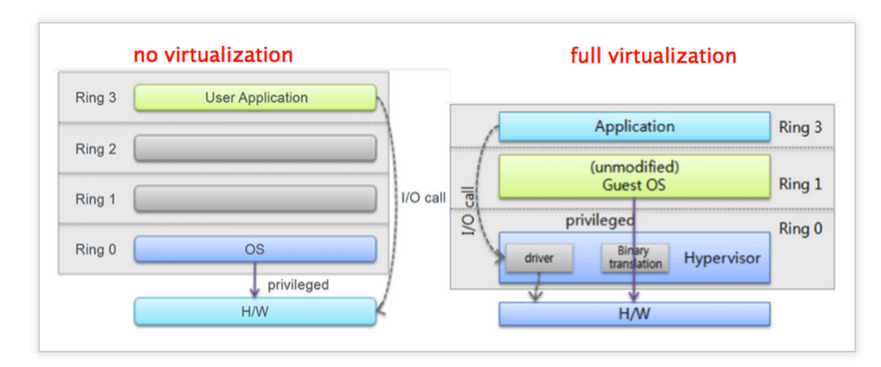

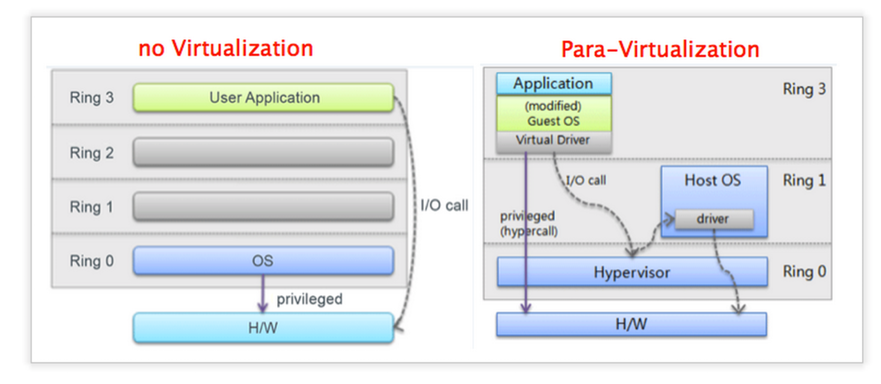
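The condition of Theorem 1 is a plain subset test, which a small sketch can make concrete. The toy ISA below is hypothetical: each bit of a mask stands for one instruction, and the type and function names (`insn_set_t`, `sensitive_set`, `is_virtualizable`, `problematic`) are illustrative only and do not come from the paper or from Xvisor.

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

/* Hypothetical toy ISA: each bit of the mask stands for one instruction. */
typedef uint32_t insn_set_t;

/* An instruction is sensitive if it is control or behavior sensitive. */
static inline insn_set_t sensitive_set(insn_set_t control, insn_set_t behavior)
{
    return control | behavior;
}

/* Theorem 1 condition: every sensitive instruction is also privileged. */
static inline bool is_virtualizable(insn_set_t sensitive, insn_set_t privileged)
{
    return (sensitive & ~privileged) == 0;
}

/* Instructions that break the condition: sensitive but not privileged. */
static inline insn_set_t problematic(insn_set_t sensitive, insn_set_t privileged)
{
    return sensitive & ~privileged;
}
```

A non-empty result from `problematic` corresponds to the instructions that a trap-and-emulate hypervisor cannot intercept and that need binary translation, hypercalls, or hardware support instead.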

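Ways to implement virtualization

- Full-Virtualization

(e.g. VMware, Xen, Xvisor, KVM)

- Everything is virtualized (I/O, interrupts, the instruction set, and so on) without help from the hardware or the guest OS; the hypervisor emulates a virtual hardware environment in software

- The OS kernel is not modified, and the OS does not know that it runs in a virtualized environment

- Privileged operations of the guest OS must be intercepted by the hypervisor and then rewritten into machine code through binary translation, to make up for a weakness of x86 (17 sensitive instructions that do not trap when executed without privilege), so performance is worse than para-virtualization

- Para-Virtualization (e.g. Xen, Xvisor, KVM)

- The OS kernel must be modified and hypercalls added

- Some non-virtualizable instructions cannot be trapped by the hypervisor, so the guest OS uses hypercalls to ask the hypervisor to carry out those operations instead; this is why the OS kernel has to be modified

- The guest OS knows that it lives in a virtualized environment and that other guest OSes exist; it sees the real hardware, so the host does not have to emulate the CPU, and the guests coordinate among themselves

- On top of the hypervisor there is also a host OS (Dom0 in Xen) that manages the hypervisor and, in the manner of a native OS, manages all I/O drivers for the hardware; the hypervisor therefore does no hardware emulation, and a guest OS needs no drivers of its own because it can go to the host OS directly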

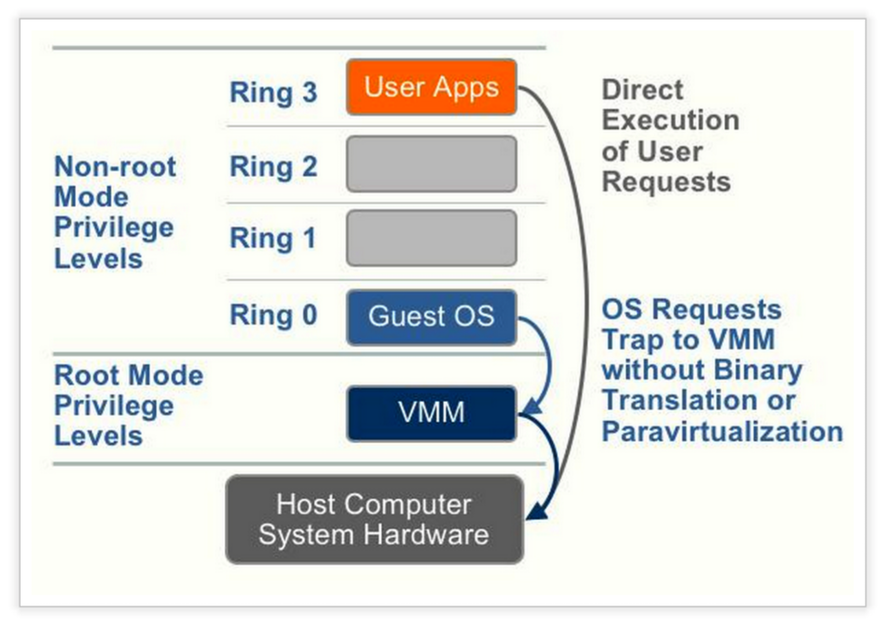

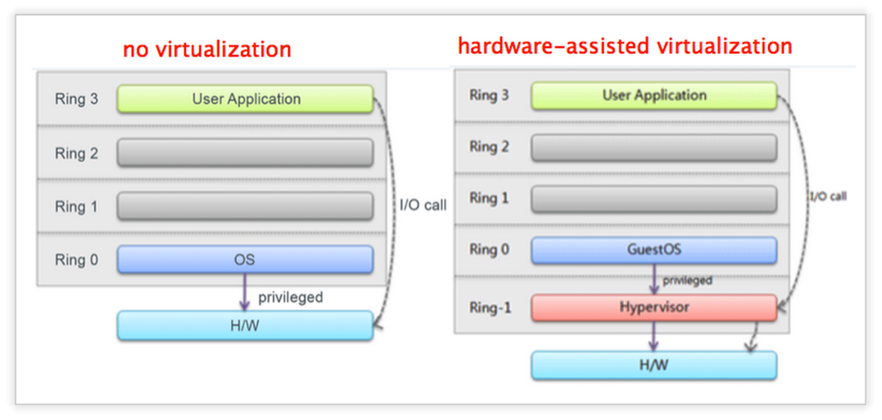

- Hardware-assisted virtualization (Intel VT, AMD-V)

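- Requires virtualization support in hardware

- New instructions and processor modes are introduced so that the hypervisor and the guest OS run in different modes; the OS runs in a monitored mode, and the hardware performs the switch whenever the hypervisor has to step in

- Privileged instructions issued by the guest OS can thus be intercepted by the hypervisor

- Intel VT and AMD-V add a Ring -1 and give the hypervisor x86 virtualization instructions, so that the hypervisor can access Ring 0 hardware while the guest OS is placed in Ring 0; the guest OS can execute Ring 0 instructions directly without affecting other guests or the host OS, so neither binary translation nor kernel modification is needed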

Source: http://tonysuo.blogspot.tw/2013/01/virtualization-3full-para-hardware.html

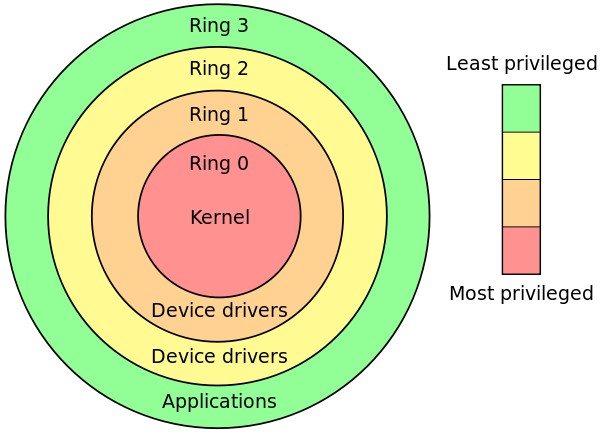

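- Introduction to rings

- The mechanism exists to protect data and to contain faults

- The x86 architecture has four levels, Ring 0 to Ring 3

- Ring 0 is the most privileged and holds the OS kernel

- Ring 3 is the least privileged, that is, user mode; applications normally run at this level

- Ring 1 and Ring 2 are intended for drivers and are rarely used

- The most privileged level cannot directly control the least privileged one, and vice versa; they communicate through call gates (which raise the CPL). There are exceptions; see p. 14 of the linked document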

Source: http://www.csie.ntu.edu.tw/~wcchen/asm98/asm/proj/b85506061/chap3/privilege.html

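Virtualizing instructions (ARM)

Problematic Instructions

- Type I: raises an undefined-instruction exception when executed in user mode

- MCR, MRC: depend on a coprocessor

- Type II: has no effect when executed in user mode

- MSR, MRS: operate on system registers

- Type III: behaves unpredictably when executed in user mode

- MOVS PC, LR: a return instruction that changes the PC and switches back to user mode; executing it in user mode gives unpredictable results

- Sensitive instructions on ARM:

- Coprocessor access: MRC / MCR / CDP / LDC / STC

- SIMD/VFP system register access: VMRS / VMSR

- Entering the TrustZone secure state: SMC

- Memory-mapped I/O access: load/store instructions from/to memory-mapped I/O locations

- Direct CPSR access: MRS / MSR / CPS / SRS / RFE / LDM (conditional execution) / DPSPC

- Indirect CPSR access: LDRT / STRT (Load/Store Unprivileged, “As User”)

- Banked register access: LDM / STM

Solutions

- Software techniques: trap and emulate

- Dynamic Binary Translation

- Replace problematic instructions with hypercalls so that they trap and can be emulated

- Hypercall

- Encode the type and the original instruction bits

- Trap to the hypervisor, which decodes and emulates the instruction

- Hardware techniques:

- Privileged instruction translation (to be added)

- MMU-enforced traps

- Virtualization extensions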

記憶體虛擬化(without hardware support)

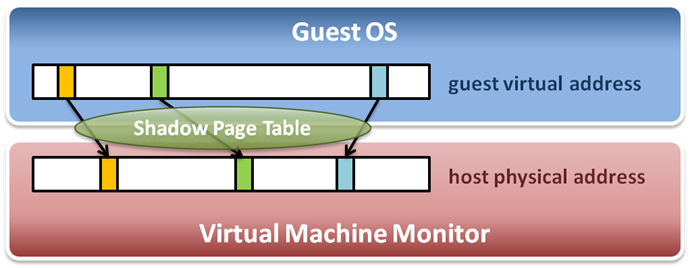

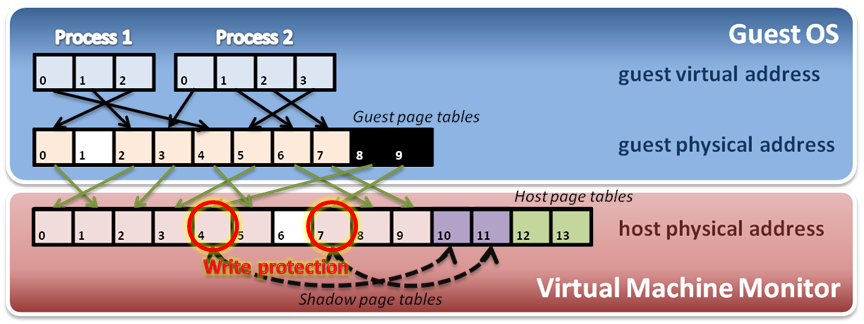

- Shadow page tables:

- Map guest virtual address to host physical address

- Guest OS maintain自己的page table到 guest實體記憶體框架

- hypervisor 把所有的guest實體記憶體框架 map 到host實體記憶體框架

(from System

Virtualization Memory Virtualization - 國立清華大學)

(from System

Virtualization Memory Virtualization - 國立清華大學)

- 為每一個guest page table 建立 Shadow page table

- hypervisor要保護放著guest page table的host frame (from System Virtualization

Memory Virtualization - 國立清華大學)

(from System Virtualization

Memory Virtualization - 國立清華大學)
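A shadow page table is the composition of the two mappings listed above. The following is a minimal sketch under simplifying assumptions: single-level tables indexed by page number, a hypothetical eight-page address space, and the invented names `build_shadow`, `guest_pt`, and `p2m`. It is not how any particular hypervisor stores its tables.

```c
#include <assert.h>
#include <stdint.h>

#define NPAGES  8u           /* hypothetical tiny address space, in pages */
#define INVALID UINT32_MAX   /* marks an unmapped page */

/*
 * guest_pt[gvpn] = guest physical frame chosen by the guest OS.
 * p2m[gpfn]      = host physical frame chosen by the hypervisor.
 * shadow[gvpn]   = host physical frame; the table the MMU actually walks.
 */
static inline void build_shadow(const uint32_t guest_pt[NPAGES],
                                const uint32_t p2m[NPAGES],
                                uint32_t shadow[NPAGES])
{
    for (uint32_t gvpn = 0; gvpn < NPAGES; gvpn++) {
        uint32_t gpfn = guest_pt[gvpn];

        if (gpfn == INVALID || gpfn >= NPAGES || p2m[gpfn] == INVALID)
            shadow[gvpn] = INVALID;    /* an access here faults to the hypervisor */
        else
            shadow[gvpn] = p2m[gpfn];  /* compose the two mappings */
    }
}
```

Because the guest can change `guest_pt` at any time, the hypervisor has to write-protect the frames that hold it and rebuild the affected shadow entries on each trapped write, which is the protection requirement stated in the last bullet.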

ARM Virtualization Extensions

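See: ARMv8#虛擬化 (register details to be added)

CPU virtualization

ARM adds a Hypervisor mode that runs at Non-secure privilege level 2

CPU virtualization extensions

- The guest OS kernel runs at EL1 and user space runs at EL0

- Most sensitive instructions can run natively at EL1 without trap and emulation

- Sensitive instructions that still need to trap are trapped to EL2 (hypervisor mode, HYP)

- Guest OS’s Load/Store

- Instructions that would affect other guest OSes

- The Hypervisor Syndrome Register (HSR) holds information about the trapped instruction so that the hypervisor can emulate it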

/* Initialize Hypervisor Configuration */

INIT_SPIN_LOCK(&arm_priv(vcpu)->hcr_lock);

arm_priv(vcpu)->hcr = (HCR_TSW_MASK |

HCR_TACR_MASK |

HCR_TIDCP_MASK |

HCR_TSC_MASK |

HCR_TWE_MASK |

HCR_TWI_MASK |

HCR_AMO_MASK |

HCR_IMO_MASK |

HCR_FMO_MASK |

HCR_SWIO_MASK |

HCR_VM_MASK);

This traps the sensitive EL1 instructions (MCR, MRC, SMC, WFE, WFI) and the interrupts (IRQ, FIQ) to EL2, and enables stage 2 address translation.

Xvisor instruction emulate

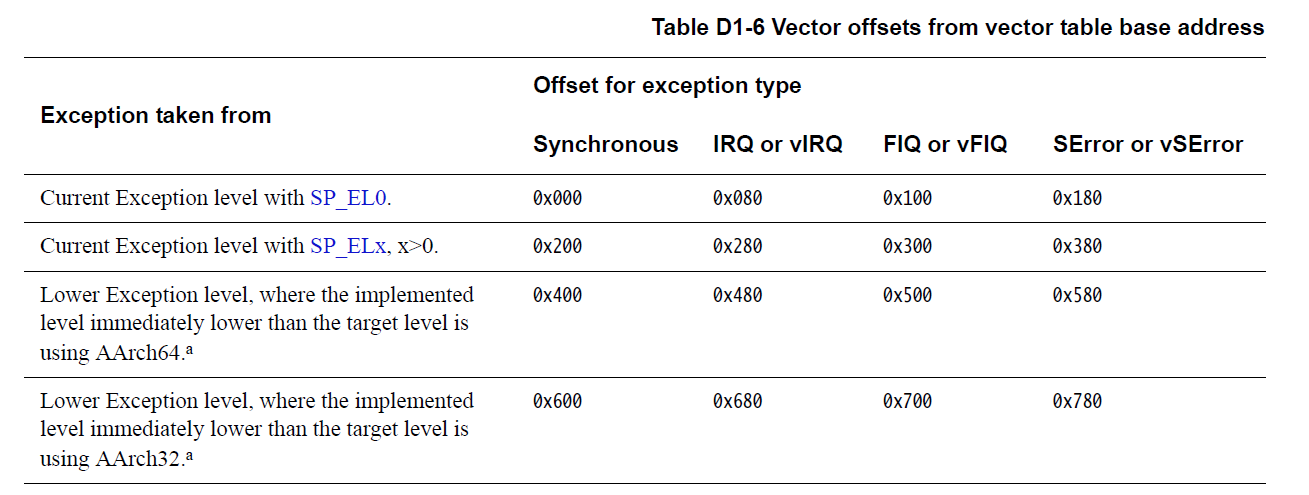

(armv8) cpu_entry.S initializes the Hyp vector base

/*

* Exception vectors.

*/

.macro ventry label

.align 7

b \label

.endm

.align 11

.globl vectors;

vectors:

ventry hyp_sync_invalid /* Synchronous EL1t */

ventry hyp_irq_invalid /* IRQ EL1t */

ventry hyp_fiq_invalid /* FIQ EL1t */

ventry hyp_error_invalid /* Error EL1t */

ventry hyp_sync /* Synchronous EL1h */

ventry hyp_irq /* IRQ EL1h */

ventry hyp_fiq_invalid /* FIQ EL1h */

ventry hyp_error_invalid /* Error EL1h */

........

EXCEPTION_HANDLER hyp_sync

PUSH_REGS

mov x1, EXC_HYP_SYNC_SPx

CALL_EXCEPTION_CFUNC do_sync

PULL_REGS

/*

* .macro PUSH_REGS

* sub sp, sp, #0x20

* push x28, x29

* push x26, x27

* push x24, x25

* push x22, x23

* ......

* push x0, x1

* add x21, sp, #0x110

* mrs x22, elr_el2

* mrs x23, spsr_el2

* stp x30, x21, [sp, #0xF0]

* stp x22, x23, [sp, #0x100]

*

*

* .macro CALL_EXCEPTION_CFUNC cfunc

* mov x0, sp (x0 holds the arch_regs_t *regs argument used below)

* bl \cfunc

* .endm

*/

- ESR_EL2, Exception Syndrome Register: holds the syndrome information of the exception taken to EL2 (read together with armv8 syndrome-register)

cpu_interrupt.c

void do_sync(arch_regs_t *regs, unsigned long mode)

{

.......

esr = mrs(esr_el2);

far = mrs(far_el2);

elr = mrs(elr_el2);

ec = (esr & ESR_EC_MASK) >> ESR_EC_SHIFT;

il = (esr & ESR_IL_MASK) >> ESR_IL_SHIFT;

iss = (esr & ESR_ISS_MASK) >> ESR_ISS_SHIFT;

.......

switch (ec) {

case EC_UNKNOWN: /* 0x00 */

/* We dont expect to get this trap so error */

rc = VMM_EFAIL;

break;

case EC_TRAP_WFI_WFE: /* 0x01 */

/* WFI emulation */

rc = cpu_vcpu_emulate_wfi_wfe(vcpu, regs, il, iss);

break;

case EC_TRAP_MCR_MRC_CP15_A32: /* 0x03 */

/* MCR/MRC CP15 emulation */

rc = cpu_vcpu_emulate_mcr_mrc_cp15(vcpu, regs, il, iss);

break;

.........

break;

case EC_TRAP_HVC_A64: /* 0x16 */

/* HVC emulation for A64 guest */

rc = cpu_vcpu_emulate_hvc64(vcpu, regs, il, iss);

break;

case EC_TRAP_MSR_MRS_SYSTEM: /* 0x18 */

/* MSR/MRS/SystemRegs emulation */

rc = cpu_vcpu_emulate_msr_mrs_system(vcpu, regs, il, iss);

break;

case EC_TRAP_LWREL_INST_ABORT: /* 0x20 */

/* Stage2 instruction abort */

fipa = (mrs(hpfar_el2) & HPFAR_FIPA_MASK) >> HPFAR_FIPA_SHIFT;

fipa = fipa << HPFAR_FIPA_PAGE_SHIFT;

fipa = fipa | (mrs(far_el2) & HPFAR_FIPA_PAGE_MASK);

rc = cpu_vcpu_inst_abort(vcpu, regs, il, iss, fipa);

break;

case EC_TRAP_LWREL_DATA_ABORT: /* 0x24 */

/* Stage2 data abort */

fipa = (mrs(hpfar_el2) & HPFAR_FIPA_MASK) >> HPFAR_FIPA_SHIFT;

fipa = fipa << HPFAR_FIPA_PAGE_SHIFT;

fipa = fipa | (mrs(far_el2) & HPFAR_FIPA_PAGE_MASK);

rc = cpu_vcpu_data_abort(vcpu, regs, il, iss, fipa);

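break;

Finally, the corresponding function in cpu_vcpu_emulate.c is called to emulate the instruction. Note that the guest running above the hypervisor may be AArch64 or AArch32, so the two cases have to be handled separately.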
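The field extraction at the top of do_sync and the fault-address computation in the two abort cases can be tried in isolation. The sketch below assumes the ARMv8-A register layouts (EC in ESR bits [31:26], IL in bit [25], ISS in bits [24:0]; HPFAR_EL2.FIPA in bits [39:4] holding IPA bits [47:12]). The helper names `esr_ec`, `esr_il`, `esr_iss`, and `fault_ipa` are illustrative and are not Xvisor functions.

```c
#include <assert.h>
#include <stdint.h>

/* ESR_ELx field layout (ARMv8-A). */
#define ESR_EC_SHIFT  26u
#define ESR_EC_MASK   (0x3Full << ESR_EC_SHIFT)  /* bits [31:26]: exception class */
#define ESR_IL_SHIFT  25u
#define ESR_IL_MASK   (0x1ull << ESR_IL_SHIFT)   /* bit  [25]: instruction length */
#define ESR_ISS_MASK  0x01FFFFFFull              /* bits [24:0]: syndrome */

static inline uint32_t esr_ec(uint64_t esr)  { return (uint32_t)((esr & ESR_EC_MASK) >> ESR_EC_SHIFT); }
static inline uint32_t esr_il(uint64_t esr)  { return (uint32_t)((esr & ESR_IL_MASK) >> ESR_IL_SHIFT); }
static inline uint32_t esr_iss(uint64_t esr) { return (uint32_t)(esr & ESR_ISS_MASK); }

/*
 * Faulting IPA of a stage 2 abort: HPFAR_EL2 supplies the page number,
 * FAR_EL2 supplies the offset within the 4 KB page.
 */
static inline uint64_t fault_ipa(uint64_t hpfar, uint64_t far)
{
    uint64_t page = (hpfar >> 4) & 0xFFFFFFFFFull;  /* FIPA field, 36 bits */
    return (page << 12) | (far & 0xFFFull);
}
```

With EC 0x24 (data abort from a lower exception level), the hypervisor would use the computed IPA to find the emulated device that the guest tried to access.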

Memory virtualization

See: armv8 virtual-memory-system-architecture

ARM adds the Intermediate Physical Address (IPA) so that a guest OS cannot access physical addresses directly

Two-stage address translation: virtual address => intermediate physical address => physical address

- Stage 1: virtual address => intermediate physical address (IPA)

- Controlled by the guest OS, which believes that the IPA is the PA

- Stage 2: intermediate physical address (IPA) => physical address

- Controlled by the hypervisor

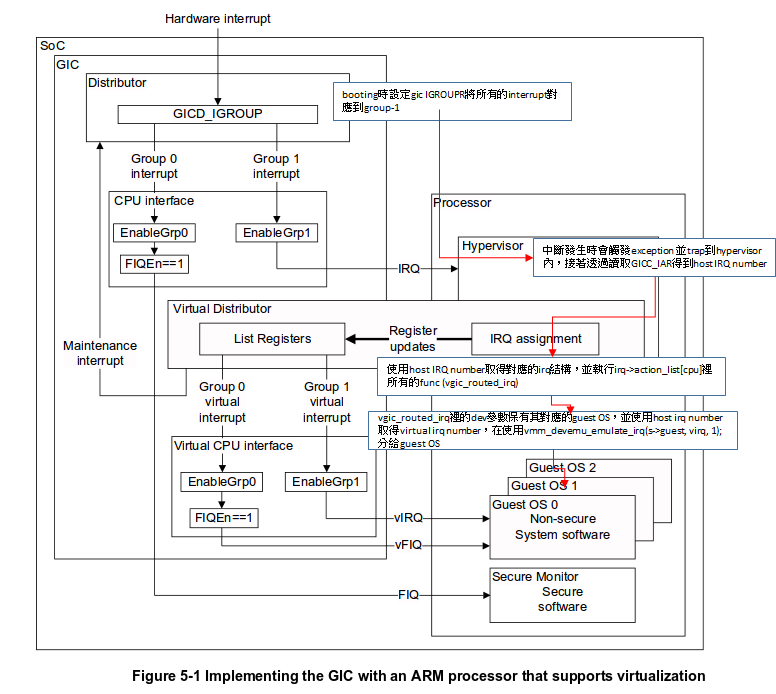

interrupt virtualization

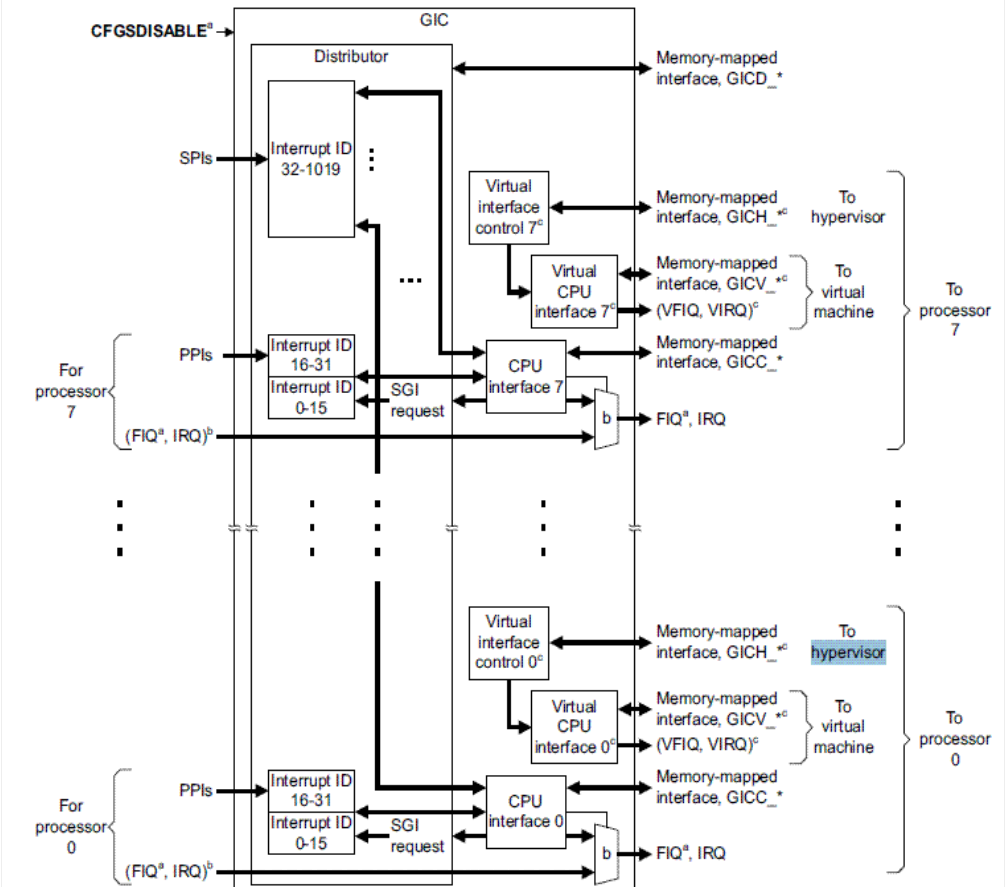

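The GIC (Generic Interrupt Controller) consists of the Interrupt Distributor and the CPU Interface.

- Interrupt Distributor:

- Routes interrupts of each type to the state defined for that type

- Must be configured at boot time

ARM adds a new hardware component, the Virtual CPU Interface, which delivers virtual interrupts. As a result, the I/O device does not have to be emulated, and a guest OS can acknowledge and clear interrupts without trapping into the hypervisor. The hypervisor still has to emulate a Virtual Interrupt Distributor, which is reached by trapping the guest OS's accesses.

- Virtual Interrupt Distributor:

- Splits interrupts into two classes, routed to the vector table of either the hypervisor or the guest OS

- Virtual CPU interface:

- Hardware that helps the hypervisor designer implement the Virtual Interrupt Distributor

(See the GIC configuration and vgic sections below)

I/O device virtualization

- ARM adds the Virtual Generic Interrupt Controller interface to deliver interrupts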

virtio

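VirtIO is an abstraction of a set of common virtual devices in a paravirtualized hypervisor. A VirtIO device is a paravirtualized device that follows the VirtIO abstraction. A guest generally sees a VirtIO device as a PCI device or an MMIO device.

The VirtIO specification is independent of both the hypervisor and the architecture. It describes the details and features of the different types of VirtIO devices.

The official VirtIO specification is available here.

Other hypervisors that support VirtIO-based paravirtualized devices include Lguest, KVM, and Xen. VirtIO was originally developed by Rusty Russell for paravirtualization in Lguest.

In Xvisor, VirtIO devices are one option among the PCI/MMIO devices offered to a guest, so a guest OS is never forced to use VirtIO.

Xvisor currently provides the following types of VirtIO devices: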

- VirtIO Net - (Paravirtualized Network Device)

- VirtIO Block - (Paravirtualized Block Device)

- VirtIO Console - (Paravirtualized Console Device)

Most ARM guests already have VirtIO devices enabled, so VirtIO can be used on Xvisor ARM without extra preparation.

Figure 1. Device emulation in full virtualization and paravirtualization environments

Figure 2. Driver abstractions with virtio

- Front-end driver

- Runs in the guest OS

- Generic

- e.g. using QEMU

- Back-end driver

- Runs in the hypervisor

- Not generic

- Emulates the behavior that the front-end driver expects

Virtual Queue: packages the commands and data needed by the guest OS and serves as the communication channel between the front-end driver and the back-end driver; it is usually implemented as a ring.

Figure 3. High-level architecture of the virtio framework

- block devices (such as disks)

- network devices

- PCI emulation

- balloon driver (for dynamically managing guest memory usage)

- console driver

Every front-end driver has a corresponding back-end driver

Figure 4. Object hierarchy of the virtio front end

Virtio buffers

- scatter-gather list: each entry in the list representing an address and a length

Virtual Queue’s 5 important functions:

- add_buf

- kick

- get_buf

- enable_cb

- disable_cb
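How the five operations fit together can be shown with a toy ring. This is a deliberately simplified sketch: a real virtqueue has a descriptor table plus separate available and used rings, whereas the hypothetical `toy_vq` below keeps a single ring with three counters. All `toy_*` names are invented for illustration and are not the Linux or Xvisor API.

```c
#include <assert.h>
#include <stdbool.h>
#include <stddef.h>

#define RING_SIZE 4u   /* hypothetical ring size */

struct sg_entry { void *addr; size_t len; };   /* one scatter-gather element */

struct toy_vq {
    struct sg_entry ring[RING_SIZE];
    unsigned avail;     /* next slot the front end fills */
    unsigned used;      /* slots below this were completed by the back end */
    unsigned taken;     /* next slot the front end reclaims */
    bool cb_enabled;    /* toggled by enable_cb / disable_cb */
    unsigned kicks;     /* notifications sent to the back end */
};

/* add_buf: expose one buffer; returns false when the ring is full. */
static inline bool toy_add_buf(struct toy_vq *vq, void *addr, size_t len)
{
    if (vq->avail - vq->taken == RING_SIZE)
        return false;
    vq->ring[vq->avail % RING_SIZE] = (struct sg_entry){ addr, len };
    vq->avail++;
    return true;
}

/* kick: tell the back end that new buffers are available. */
static inline void toy_kick(struct toy_vq *vq) { vq->kicks++; }

/* Back end stand-in: consume every available buffer by marking it used. */
static inline void toy_backend_run(struct toy_vq *vq) { vq->used = vq->avail; }

/* get_buf: reclaim one completed buffer, or NULL if none is ready. */
static inline void *toy_get_buf(struct toy_vq *vq, size_t *len)
{
    if (vq->taken == vq->used)
        return NULL;
    struct sg_entry e = vq->ring[vq->taken % RING_SIZE];
    vq->taken++;
    *len = e.len;
    return e.addr;
}

/* enable_cb / disable_cb: allow or suppress completion callbacks. */
static inline void toy_enable_cb(struct toy_vq *vq)  { vq->cb_enabled = true; }
static inline void toy_disable_cb(struct toy_vq *vq) { vq->cb_enabled = false; }
```

A front end would typically batch several `toy_add_buf` calls before a single `toy_kick`, since each notification to the hypervisor is expensive compared with writing a ring slot.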

Xvisor

GIC configuration

Start with the Foundation Model manual: -DGIC_DIST_BASE=0x2c001000 -DGIC_CPU_BASE=0x2c002000

- gic distributor

- gic cpu interface

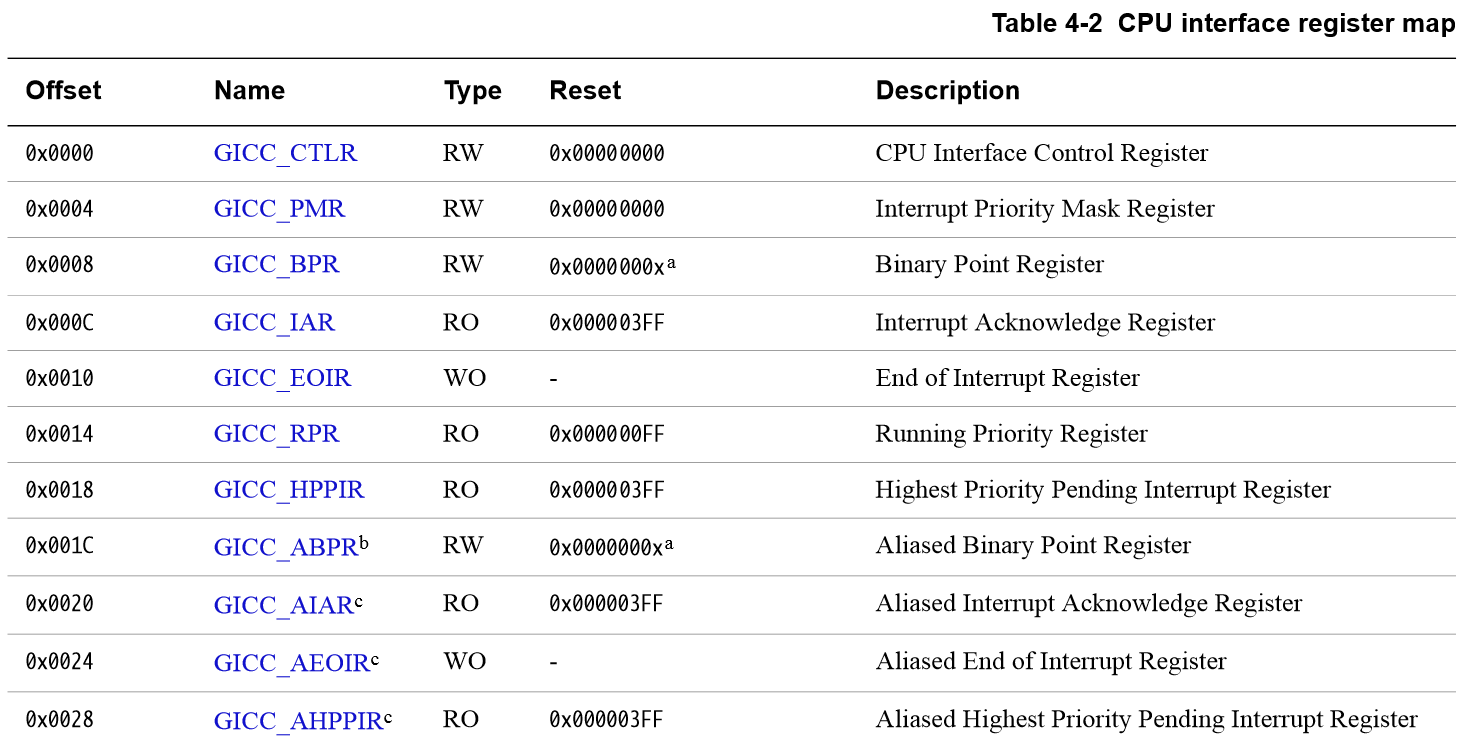

(from GICv2 Architecture Specification P.75)

- Setup before booting

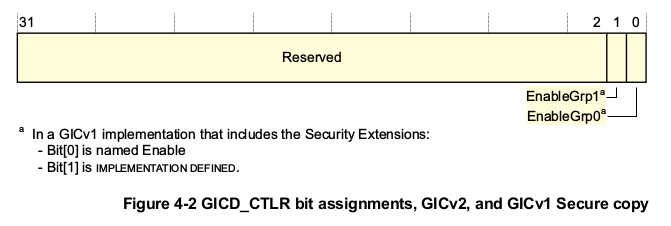

/* GIC Distributor Interface Init */

mrs x4, mpidr_el1

ldr x5, __mpidr_mask

and x4, x4, x5 /* CPU affinity */

__gic_dist_init:

ldr x0, __gic_dist_base /* Dist GIC base */

mov x1, #0 /* non-0 cpus should at least */

cmp x4, xzr /* program IGROUP0 */

bne 1f

mov x1, #3 /* Enable group0 & group1 */

str w1, [x0, #0x00] /* Ctrl Register */

(from GICv2 Architecture Specification)

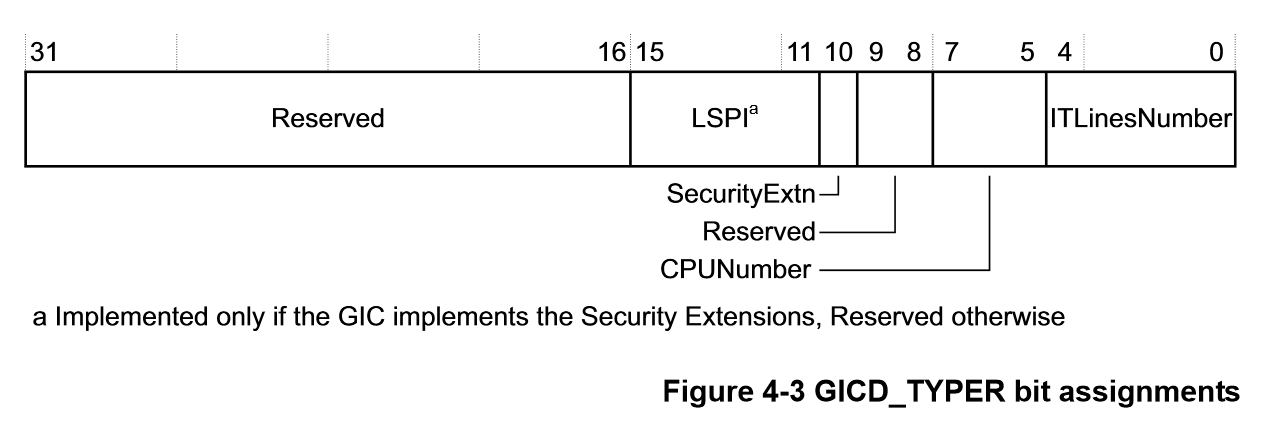

ldr w1, [x0, #0x04] /* Type Register */

1: and x1, x1, #0x1f /* No. of IGROUPn registers */

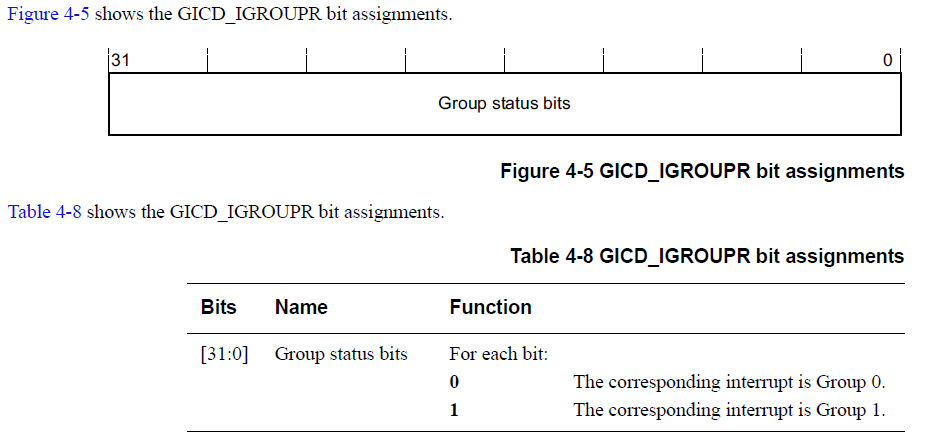

add x2, x0, #0x080 /* IGROUP0 Register */

movn x3, #0 /* All interrupts to group-1 */

2: str w3, [x2], #4

subs x1, x1, #1

bge 2b

If ITLinesNumber=N, the maximum number of interrupts is 32(N+1).

(from GICv2 Architecture Specification P.76)
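The relation between ITLinesNumber and the number of interrupts is simple arithmetic on the low five bits of the Type Register, which is what the `and x1, x1, #0x1f` in the boot code extracts. A small sketch, with the illustrative helper names `gicd_it_lines_number`, `gicd_max_irqs`, and `gicd_num_igroup_regs`:

```c
#include <assert.h>
#include <stdint.h>

/* ITLinesNumber is bits [4:0] of GICD_TYPER. */
static inline uint32_t gicd_it_lines_number(uint32_t typer)
{
    return typer & 0x1Fu;
}

/* Maximum number of interrupts: 32 * (N + 1). */
static inline uint32_t gicd_max_irqs(uint32_t typer)
{
    return 32u * (gicd_it_lines_number(typer) + 1u);
}

/* One 32-bit GICD_IGROUPRn register covers 32 interrupts, so N + 1 registers. */
static inline uint32_t gicd_num_igroup_regs(uint32_t typer)
{
    return gicd_it_lines_number(typer) + 1u;
}
```

For example, a Type Register value whose low bits are 4 describes 160 interrupt lines and five IGROUPR registers, which matches the N+1 iterations of the store loop in the boot code.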

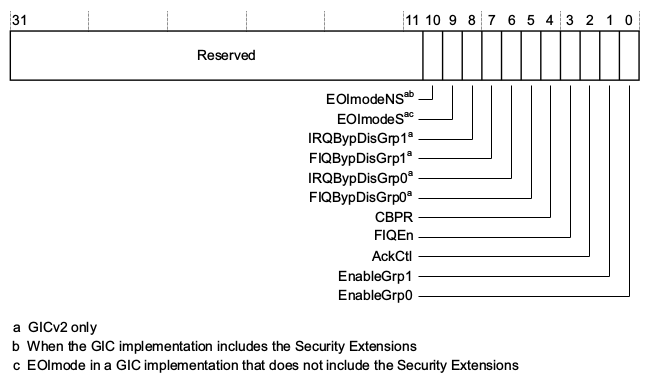

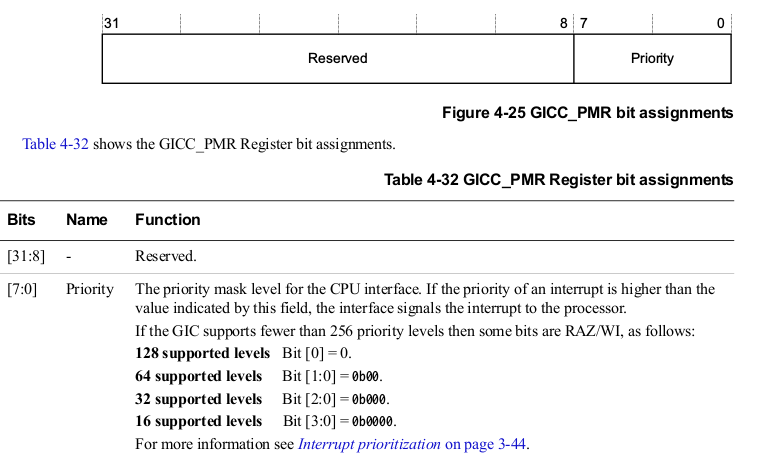

- GICC_CTLR

- GICC_PMR

__gic_cpu_init:

/* GIC Secured CPU Interface Init */

ldr x0, __gic_cpu_base /* GIC CPU base */

mov x1, #0x80

str w1, [x0, #0x4] /* GIC CPU Priority Mask */

mov x1, #0x3 /* Enable group0 & group1 */

str w1, [x0] /* GIC CPU Control */

- host irq initialization

struct vmm_host_irqs_ctrl {

vmm_spinlock_t lock;

struct vmm_host_irq *irq;

u32 (*active)(u32);

const struct vmm_devtree_nodeid *matches;

};

static struct vmm_host_irqs_ctrl hirqctrl;

(arm,cortex-a15-gic is the GIC compatible string set in foundation-v8.dtsi)

This expands to

__nidtbl struct vmm_devtree_nidtbl_entry __ca15gic = {

.signature = VMM_DEVTREE_NIDTBL_SIGNATURE,

.subsys = "host_irq",

.nodeid.name =" ",

.nodeid.type =" ",

.nodeid.compatible ="arm,cortex-a15-gic",

.nodeid.data = gic_eoimode_init,

}

When vmm_host_irq is initialized, it looks up this node and runs gic_eoimode_init:

static int __init gic_devtree_init(struct vmm_devtree_node *node,

struct vmm_devtree_node *parent,

bool eoimode)

{

.........

gic_init_bases(gic_cnt, eoimode, irq, cpu_base, cpu2_base, dist_base);

.......

if (parent) {

if (vmm_devtree_read_u32(node, "parent_irq", &irq)) {

irq = 1020;

}

gic_cascade_irq(gic_cnt, irq);

} else {

/* this branch is taken */

vmm_host_irq_set_active_callback(gic_active_irq);

}

}

static u32 gic_active_irq(u32 cpu_irq_nr)

{

u32 ret;

ret = gic_read(gic_data[0].cpu_base + GIC_CPU_INTACK) & 0x3FF;

....

return ret;

}

- Corresponds to the Interrupt Acknowledge Register (GICC_IAR) of the GIC CPU interface

- Purpose: The processor reads this register to obtain the interrupt ID of the signaled interrupt. This read acts as an acknowledge for the interrupt.
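The `& 0x3FF` in gic_active_irq keeps only the interrupt ID field of GICC_IAR. As a sketch of the GICv2 layout (interrupt ID in bits [9:0], source CPU ID in bits [12:10], ID 1023 meaning spurious), with the illustrative helper names `iar_irq_id`, `iar_cpu_id`, and `iar_is_spurious`:

```c
#include <assert.h>
#include <stdbool.h>
#include <stdint.h>

#define GIC_SPURIOUS_IRQ 1023u   /* returned when no interrupt is pending */

/* Interrupt ID: bits [9:0] of GICC_IAR. */
static inline uint32_t iar_irq_id(uint32_t iar)
{
    return iar & 0x3FFu;
}

/* For SGIs, bits [12:10] identify the CPU interface that raised the interrupt. */
static inline uint32_t iar_cpu_id(uint32_t iar)
{
    return (iar >> 10) & 0x7u;
}

static inline bool iar_is_spurious(uint32_t iar)
{
    return iar_irq_id(iar) == GIC_SPURIOUS_IRQ;
}
```

A handler would normally check for the spurious ID before dispatching, because reading GICC_IAR when nothing is pending still returns a value.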

static int __init gic_init_bases(u32 gic_nr, bool eoimode, u32 irq_start,

virtual_addr_t cpu_base, virtual_addr_t cpu2_base,

virtual_addr_t dist_base)

{

........

gic_dist_init(gic);

gic_cpu_init(gic);

}

static void __init gic_dist_init(struct gic_chip_data *gic)

{

/* Disable IRQ distribution */

gic_write(0, base + GIC_DIST_CTRL);

....

/*

* Disable all interrupts.

*/

for (i = 0; i < gic->max_irqs; i += 32) {

gic_write(0xffffffff, base + GIC_DIST_ENABLE_CLEAR + i * 4 / 32);

}

/*

* Setup the Host IRQ subsystem.

* Note: We handle all interrupts including SGIs and PPIs via C code.

* The Linux kernel handles peripheral interrupts via C code and

* SGI/PPI via assembly code.

*/

for (i = gic->irq_start; i < (gic->irq_start + gic->max_irqs); i++) {

vmm_host_irq_set_chip(i, &gic_chip);

vmm_host_irq_set_chip_data(i, gic);

vmm_host_irq_set_handler(i, vmm_handle_fast_eoi);

/* Mark SGIs and PPIs as per-CPU IRQs */

if (i < 32) {

vmm_host_irq_mark_per_cpu(i);

}

}

/* Enable IRQ distribution */

gic_write(1, base + GIC_DIST_CTRL);

}

This sets up the irq entries in hirqctrl (the loop variable i is the host IRQ number) and sets the handler.

void vmm_handle_fast_eoi(struct vmm_host_irq *irq, u32 cpu, void *data)

{

irq_flags_t flags;

struct vmm_host_irq_action *act;

list_for_each_entry(act, &irq->action_list[cpu], head) {

if (act->func(irq->num, act->dev) == VMM_IRQ_HANDLED) {

break;

}

}

if (irq->chip && irq->chip->irq_eoi) {

irq->chip->irq_eoi(irq);

}

}

- Runs the actions in action_list

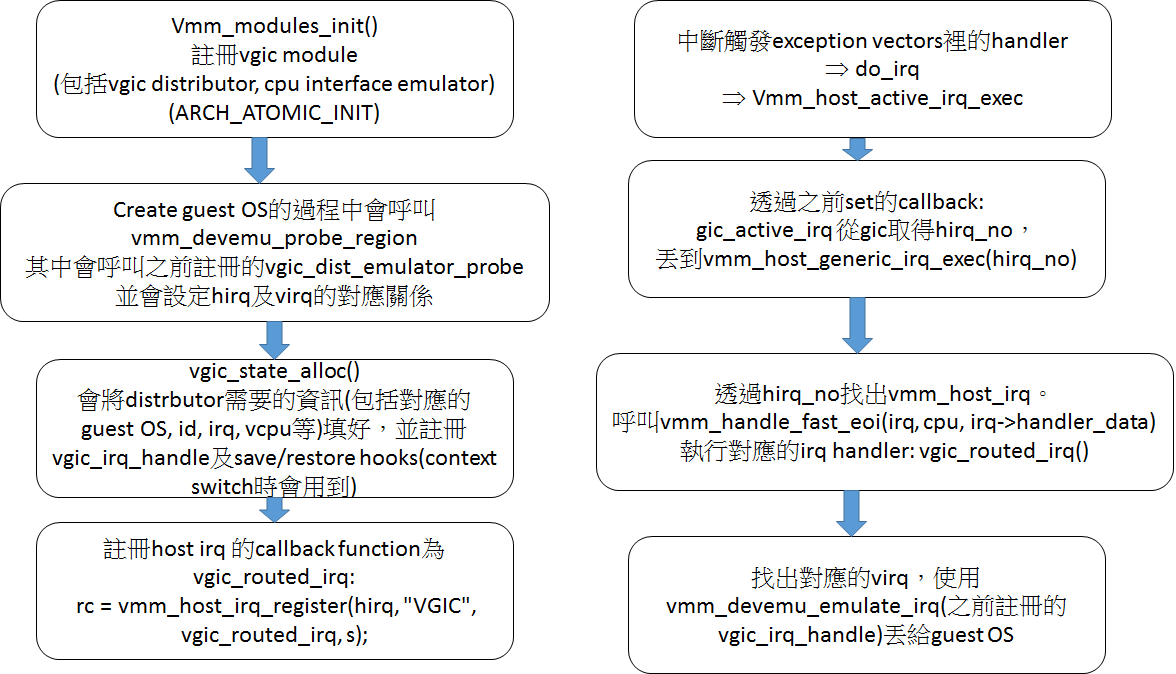

Virtual gic (vgic)

vgic.c provides the vgic implementation (using the module and devtree facilities):

static struct vmm_emulator vgic_dist_emulator = {

.name = "vgic-dist",

.match_table = vgic_dist_emuid_table,

.endian = VMM_DEVEMU_LITTLE_ENDIAN,

.probe = vgic_dist_emulator_probe,

.remove = vgic_dist_emulator_remove,

.reset = vgic_dist_emulator_reset,

......

};

static struct vmm_emulator vgic_cpu_emulator = {

.name = "vgic-cpu",

.match_table = vgic_cpu_emuid_table,

.endian = VMM_DEVEMU_LITTLE_ENDIAN,

.probe = vgic_cpu_emulator_probe,

.remove = vgic_cpu_emulator_remove,

.reset = vgic_cpu_emulator_reset,

};

static int __init vgic_emulator_init(void)

{

int i, rc;

struct vmm_devtree_node *node;

vgich.avail = FALSE;

.......

/* Get the GIC register addresses and the GIC maintenance IRQ from the devtree */

.......

/* Register the vgic distributor and the vgic CPU interface */

rc = vmm_devemu_register_emulator(&vgic_dist_emulator);

rc = vmm_devemu_register_emulator(&vgic_cpu_emulator);

......

}

VMM_DECLARE_MODULE(MODULE_DESC,

MODULE_AUTHOR,

MODULE_LICENSE,

MODULE_IPRIORITY,

MODULE_INIT,

MODULE_EXIT);

When a guest OS is created and its address space is initialized, the previously registered probe functions set up the distributor and the CPU interface. vgic_dist_emulator_probe reads the guest device tree to set up vgic_state:

static int vgic_dist_emulator_probe(struct vmm_guest *guest,

struct vmm_emudev *edev,

const struct vmm_devtree_nodeid *eid)

{

int rc;

u32 i, virq, hirq, len, parent_irq, num_irq;

struct vgic_guest_state *s;

.......

rc = vmm_devtree_read_u32(edev->node, "parent_irq", &parent_irq);

if (rc) {

return rc;

}

if (vmm_devtree_read_u32(edev->node, "num_irq", &num_irq)) {

num_irq = VGIC_MAX_NIRQ;

}

if (num_irq > VGIC_MAX_NIRQ) {

num_irq = VGIC_MAX_NIRQ;

}

s = vgic_state_alloc(edev->node->name,

guest, guest->vcpu_count,

num_irq, parent_irq);

len = vmm_devtree_attrlen(edev->node, "host2guest") / 8;

for (i = 0; i < len; i++) {

......

/** Register function callback for hirq(host irq) */

rc = vmm_host_irq_register(hirq, "VGIC",

vgic_routed_irq, s);

......

}

static struct vgic_guest_state *vgic_state_alloc(const char *name,

struct vmm_guest *guest,

u32 num_cpu,

u32 num_irq,

u32 parent_irq)

{

u32 i;

struct vmm_vcpu *vcpu;

struct vgic_guest_state *s = NULL;

/* Alloc VGIC state */

s = vmm_zalloc(sizeof(struct vgic_guest_state));

s->guest = guest;

s->num_cpu = num_cpu;

s->num_irq = num_irq;

s->id[0] = 0x90 /* id0 */;

s->id[1] = 0x13 /* id1 */;

s->id[2] = 0x04 /* id2 */;

s->id[3] = 0x00 /* id3 */;

s->id[4] = 0x0d /* id4 */;

s->id[5] = 0xf0 /* id5 */;

s->id[6] = 0x05 /* id6 */;

s->id[7] = 0xb1 /* id7 */;

for (i = 0; i < VGIC_NUM_IRQ(s); i++) {

VGIC_SET_HOST_IRQ(s, i, UINT_MAX);

}

for (i = 0; i < VGIC_NUM_CPU(s); i++) {

s->vstate[i].vcpu = vmm_manager_guest_vcpu(guest, i);

s->vstate[i].parent_irq = parent_irq;

}

INIT_SPIN_LOCK(&s->dist_lock);

/* Register guest irq handler */

for (i = 0; i < VGIC_NUM_IRQ(s); i++) {

vmm_devemu_register_irq_handler(guest, i,

name, vgic_irq_handle, s);

}

/* Set up the save and restore functions used later at context switch */

/* Setup save/restore hooks */

list_for_each_entry(vcpu, &guest->vcpu_list, head) {

arm_vgic_setup(vcpu,

vgic_save_vcpu_context,

vgic_restore_vcpu_context, s);

}

return s;

}

/* Handle host-to-guest routed IRQ generated by device */

static vmm_irq_return_t vgic_routed_irq(int irq_no, void *dev)

{

........

/* Determine guest IRQ from host IRQ */

virq = (u32)arch_atomic_read(&vgich.host2guest_irq[hirq]);

if (VGIC_MAX_NIRQ <= virq) {

goto done;

}

/* Lower the interrupt level.

* This will clear previous interrupt state.

*/

rc = vmm_devemu_emulate_irq(s->guest, virq, 0);

/* Elevate the interrupt level.

* This will force interrupt triggering.

*/

rc = vmm_devemu_emulate_irq(s->guest, virq, 1);

done:

return VMM_IRQ_HANDLED;

}

/* Process IRQ asserted by device emulation framework */

static void vgic_irq_handle(u32 irq, int cpu, int level, void *opaque)

{

.........

if (irq < 32) {

/* In case of PPIs and SGIs */

cm = target = (1 << cpu);

} else {

/* In case of SPIs */

cm = VGIC_ALL_CPU_MASK(s);

target = VGIC_TARGET(s, irq);

for (cpu = 0; cpu < VGIC_NUM_CPU(s); cpu++) {

if (target & (1 << cpu)) {

break;

}

}

if (VGIC_NUM_CPU(s) <= cpu) {

vmm_spin_unlock_irqrestore_lite(&s->dist_lock, flags);

return;

}

}

/* Find out VCPU pointer */

vs = &s->vstate[cpu];

/* If level not changed then skip */

if (level == VGIC_TEST_LEVEL(s, irq, cm)) {

goto done;

}

/* Update IRQ state */

if (level) {

VGIC_SET_LEVEL(s, irq, cm);

if (VGIC_TEST_ENABLED(s, irq, cm)) {

VGIC_SET_PENDING(s, irq, target);

irq_pending = TRUE;

}

} else {

VGIC_CLEAR_LEVEL(s, irq, cm);

}

/* Directly updating VGIC HW for current VCPU */

if (vs->vcpu == vmm_scheduler_current_vcpu()) {

/* The VGIC HW state may have changed when the

* VCPU was running hence, sync VGIC VCPU state.

*/

__vgic_sync_vcpu_hwstate(s, vs);

/* Flush IRQ state change to VGIC HW */

if (irq_pending) {

__vgic_flush_vcpu_hwstate_irq(s, vs, irq);

}

}

done:

........

/* Forcefully resume VCPU if waiting for IRQ */

if (irq_pending) {

vmm_vcpu_irq_wait_resume(vs->vcpu);

}

}

device emulator(以uart為例)

timer 及 time event

MMU

可參考 AJ NOTE

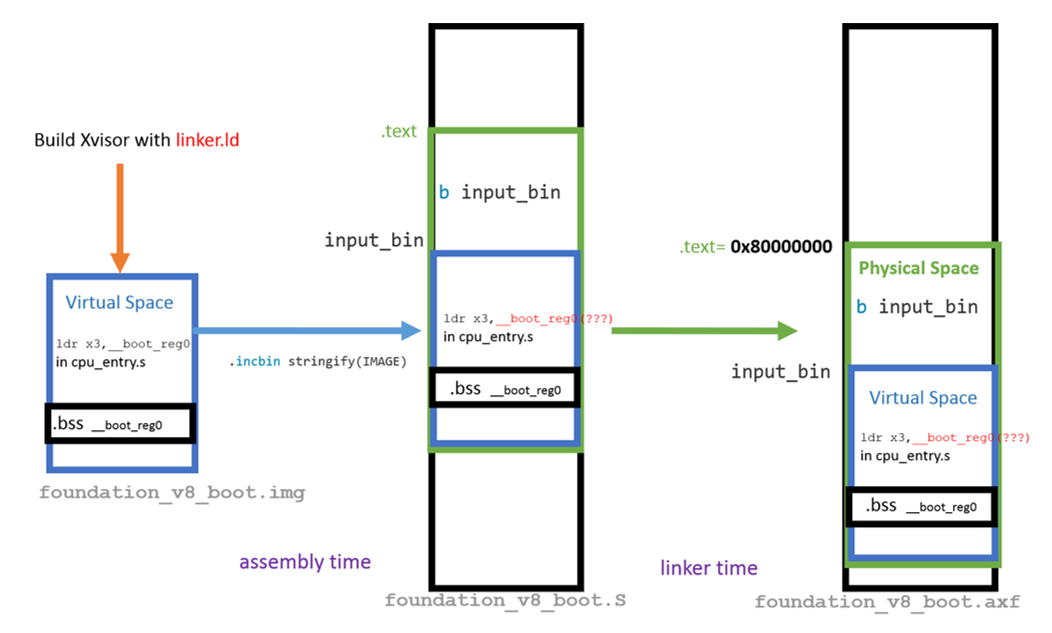

- 需要在MMU啟動前在virtual space下啟動Xvisor

- 在assembly time時, 把img加入至.text,使用.incbin

_start_mmu_init:

/* Setup SP as-per load address */

ldr x0, __hvc_stack_end

mov sp, x0

sub sp, sp, x6

add sp, sp, x4

.........

bl _setup_initial_ttbl

Initializing the translation table

- mmu_lpae_entry_ttbl.c

void __attribute__ ((section(".entry")))

_setup_initial_ttbl(virtual_addr_t load_start, virtual_addr_t load_end,

virtual_addr_t exec_start, virtual_addr_t exec_end)

{

..........

lpae_entry.ttbl_base = to_load_pa((virtual_addr_t)&def_ttbl); /* def_ttbl is later loaded into ttbr0_el2 (Translation Table Base Register) */

lpae_entry.next_ttbl = (u64 *)lpae_entry.ttbl_base;

..........

/* Map physical = logical

* Note: This mapping is using at boot time only

*/

__setup_initial_ttbl(&lpae_entry, load_start, load_end, load_start,

AINDEX_NORMAL_WB, TRUE);

/* Map to logical addresses which are

* covered by read-only linker sections

* Note: This mapping is used at runtime

*/

SETUP_RO_SECTION(lpae_entry, text);

SETUP_RO_SECTION(lpae_entry, init);

SETUP_RO_SECTION(lpae_entry, cpuinit);

SETUP_RO_SECTION(lpae_entry, spinlock);

SETUP_RO_SECTION(lpae_entry, rodata);

/* Map rest of logical addresses which are

* not covered by read-only linker sections

* Note: This mapping is used at runtime

*/

__setup_initial_ttbl(&lpae_entry, exec_start, exec_end, load_start,

AINDEX_NORMAL_WB, TRUE);

}

void __attribute__ ((section(".entry")))

__setup_initial_ttbl(struct mmu_lpae_entry_ctrl *lpae_entry,

virtual_addr_t map_start, virtual_addr_t map_end,

virtual_addr_t pa_start, u32 aindex, bool writeable)

{

........

u64 *ttbl;

/* align start addresses */

map_start &= TTBL_L3_MAP_MASK; /* 0xFFFFFFFFFFFFF000ULL: the low 12 bits map straight to the output address */

pa_start &= TTBL_L3_MAP_MASK;

page_addr = map_start;

while (page_addr < map_end) {

/* Setup level1 table */

ttbl = (u64 *) lpae_entry->ttbl_base;

index = (page_addr & TTBL_L1_INDEX_MASK) >> TTBL_L1_INDEX_SHIFT;

if (ttbl[index] & TTBL_VALID_MASK) {

/* Find level2 table */

ttbl =

(u64 *) (unsigned long)(ttbl[index] &

TTBL_OUTADDR_MASK);

} else {

/* Allocate new level2 table */

if (lpae_entry->ttbl_count == TTBL_INITIAL_TABLE_COUNT) {

while (1) ; /* No initial table available */

}

for (i = 0; i < TTBL_TABLE_ENTCNT; i++) {

lpae_entry->next_ttbl[i] = 0x0ULL;

}

lpae_entry->ttbl_tree[lpae_entry->ttbl_count] =

((virtual_addr_t) ttbl -

lpae_entry->ttbl_base) >> TTBL_TABLE_SIZE_SHIFT;

lpae_entry->ttbl_count++;

ttbl[index] |=

(((virtual_addr_t) lpae_entry->next_ttbl) &

TTBL_OUTADDR_MASK);

ttbl[index] |= (TTBL_TABLE_MASK | TTBL_VALID_MASK);

ttbl = lpae_entry->next_ttbl;

lpae_entry->next_ttbl += TTBL_TABLE_ENTCNT;

}

/* Setup level2 table */

index = (page_addr & TTBL_L2_INDEX_MASK) >> TTBL_L2_INDEX_SHIFT;

if (ttbl[index] & TTBL_VALID_MASK) {

/* Find level3 table */

ttbl =

(u64 *) (unsigned long)(ttbl[index] &

TTBL_OUTADDR_MASK);

} else {

/* Allocate new level3 table */

......

}

/* Setup level3 table */

index = (page_addr & TTBL_L3_INDEX_MASK) >> TTBL_L3_INDEX_SHIFT;

if (!(ttbl[index] & TTBL_VALID_MASK)) {

/* Update level3 table */

.......

}

/* Point to next page */

page_addr += TTBL_L3_BLOCK_SIZE;

}

}

/* Update the httbr value */

ldr x1, __httbr_set_addr

sub x1, x1, x6

add x1, x1, x4

ldr x0, [x1]

sub x0, x0, x6

add x0, x0, x4

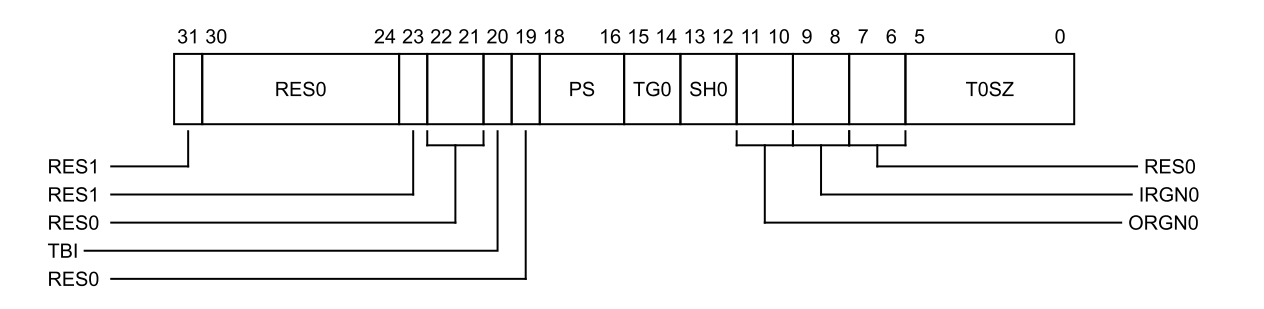

str x0, [x1]

TCR_EL2, Translation Control Register (EL2)

/* Setup Hypervisor Translation Control Register */

ldr x0, __htcr_set

msr tcr_el2, x0
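The value loaded from `__htcr_set` can be reproduced field by field. The sketch below uses only the macro names, shifts, and field values given in these notes for `__htcr_set`; any reserved bits that the real Xvisor definition may also set are not modelled, and the helper names `htcr_set_value` and `tcr_t0_region_bits` are illustrative.

```c
#include <assert.h>
#include <stdint.h>

/* Field encodings as described in these notes. */
#define TCR_T0SZ_VAL(in_bits)  ((uint64_t)((64 - (in_bits)) & 0x3f))
#define TCR_PS_40BITS          (2ull << 16)   /* 40-bit physical addresses */
#define TCR_SH0_SHIFT          12
#define TCR_ORGN0_SHIFT        10
#define TCR_IRGN0_SHIFT        8

/* Compose the value from its fields. */
static inline uint64_t htcr_set_value(void)
{
    return TCR_T0SZ_VAL(39) | TCR_PS_40BITS |
           (0x3ull << TCR_SH0_SHIFT) |    /* Inner Shareable */
           (0x1ull << TCR_ORGN0_SHIFT) |  /* outer Write-Back Write-Allocate */
           (0x1ull << TCR_IRGN0_SHIFT);   /* inner Write-Back Write-Allocate */
}

/* Size of the TTBR0 region in address bits: 64 - T0SZ. */
static inline unsigned tcr_t0_region_bits(uint64_t tcr)
{
    return 64u - (unsigned)(tcr & 0x3f);
}
```

With these fields T0SZ is 25, so the region covers 2^39 bytes, and the composed value is 0x23519.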

- __htcr_set: (TCR_T0SZ_VAL(39) | TCR_PS_40BITS | (0x3 << TCR_SH0_SHIFT) | (0x1 << TCR_ORGN0_SHIFT) | (0x1 << TCR_IRGN0_SHIFT))

- TCR_T0SZ_VAL(39) = (64-39) & 0x3f

- The size offset of the memory region addressed by TTBR0_EL2. The region size is 2^(64-T0SZ) bytes (here the region size is set to 2^39 bytes).

- The initial lookup level is level 1

- TCR_PS_40BITS = 2 << 16: sets the Physical Address Size to 40 bits, 1 TB.

- TCR_SH0_SHIFT = 12; 0x3: Shareability attribute for memory associated: Inner Shareable

- TCR_ORGN0_SHIFT = 10; 0x1: Outer cacheability attribute for memory associated: Write-Back Write-Allocate Cacheable

- TCR_IRGN0_SHIFT = 8; 0x1: Inner cacheability attribute for memory associated: Write-Back Write-Allocate Cacheable

- [Write-Back Write-Allocate](http://upload.wikimedia.org/wikipedia/commons/c/c2/Write-back_with_write-allocation.svg)

/* Setup Hypervisor Translation Base Register */

ldr x0, __httbr_set /* address of def_ttbl */

msr ttbr0_el2, x0

scheduler

context switch

void arch_vcpu_switch(struct vmm_vcpu *tvcpu,

struct vmm_vcpu *vcpu,

arch_regs_t *regs)

{

u32 ite;

irq_flags_t flags;

/* Save user registers & banked registers */

if (tvcpu) {

arm_regs(tvcpu)->pc = regs->pc;

arm_regs(tvcpu)->lr = regs->lr;

arm_regs(tvcpu)->sp = regs->sp;

for (ite = 0; ite < CPU_GPR_COUNT; ite++) {

arm_regs(tvcpu)->gpr[ite] = regs->gpr[ite];

}

arm_regs(tvcpu)->pstate = regs->pstate;

if (tvcpu->is_normal) {

/* Update last host CPU */

arm_priv(tvcpu)->last_hcpu = vmm_smp_processor_id();

/* Save VGIC context */

arm_vgic_save(tvcpu);

/* Save sysregs context */

cpu_vcpu_sysregs_save(tvcpu);

/* Save VFP and SIMD context */

cpu_vcpu_vfp_save(tvcpu);

/* Save generic timer */

if (arm_feature(tvcpu, ARM_FEATURE_GENERIC_TIMER)) {

generic_timer_vcpu_context_save(tvcpu,

arm_gentimer_context(tvcpu));

}

}

}

/* Restore user registers & special registers */

regs->pc = arm_regs(vcpu)->pc;

regs->lr = arm_regs(vcpu)->lr;

regs->sp = arm_regs(vcpu)->sp;

for (ite = 0; ite < CPU_GPR_COUNT; ite++) {

regs->gpr[ite] = arm_regs(vcpu)->gpr[ite];

}

regs->pstate = arm_regs(vcpu)->pstate;

if (vcpu->is_normal) {

/* Restore hypervisor context */

vmm_spin_lock_irqsave(&arm_priv(vcpu)->hcr_lock, flags);

msr(hcr_el2, arm_priv(vcpu)->hcr);

vmm_spin_unlock_irqrestore(&arm_priv(vcpu)->hcr_lock, flags);

msr(cptr_el2, arm_priv(vcpu)->cptr);

msr(hstr_el2, arm_priv(vcpu)->hstr);

/* Restore Stage2 MMU context */

mmu_lpae_stage2_chttbl(vcpu->guest->id,

arm_guest_priv(vcpu->guest)->ttbl);

/* Restore generic timer */

if (arm_feature(vcpu, ARM_FEATURE_GENERIC_TIMER)) {

generic_timer_vcpu_context_restore(vcpu,

arm_gentimer_context(vcpu));

}

/* Restore VFP and SIMD context */

cpu_vcpu_vfp_restore(vcpu);

/* Restore sysregs context */

cpu_vcpu_sysregs_restore(vcpu);

/* Restore VGIC context */

arm_vgic_restore(vcpu);

/* Flush TLB if moved to new host CPU */

if (arm_priv(vcpu)->last_hcpu != vmm_smp_processor_id()) {

/* Invalidate all guest TLB enteries because

* we might have stale guest TLB enteries from

* our previous run on new_hcpu host CPU

*/

inv_tlb_guest_allis();

/* Ensure changes are visible */

dsb();

isb();

}

}

/* Clear exclusive monitor */

clrex();

}

- tlb: inv_tlb_guest_allis(); if the next scheduled VCPU last ran on a different host CPU than the current one, the guest TLB entries held on this CPU may be stale, so they must be invalidated.

- tlbi alle1is: Invalidate all EL1&0 regime stage 1 and 2 TLB entries on all PEs in the same Inner Shareable domain.
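The migration check can be modelled in a few lines. The Python sketch below is illustrative only: `Vcpu`, `HostCpu`, `switch_out` and `switch_in` are hypothetical names, the flush is reduced to a counter, and treating a never-run VCPU (`last_hcpu = None`) as "migrated" is an assumption of this model rather than something taken from the Xvisor source.

```python
class Vcpu:
    def __init__(self, name):
        self.name = name
        self.last_hcpu = None  # host CPU this VCPU last ran on (assumed unset at first)


class HostCpu:
    def __init__(self, cpu_id):
        self.cpu_id = cpu_id
        self.flushes = 0  # how many guest TLB invalidations were issued here


def switch_out(vcpu, hcpu):
    # Mirrors "Update last host CPU" on the save path.
    vcpu.last_hcpu = hcpu.cpu_id


def switch_in(vcpu, hcpu):
    # Mirrors "Flush TLB if moved to new host CPU" on the restore path.
    # Returns True when a guest TLB invalidation was needed.
    if vcpu.last_hcpu != hcpu.cpu_id:
        hcpu.flushes += 1
        return True
    return False
```

Resuming on the same host CPU skips the invalidation, while resuming on a different one pays for it once.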

#define inv_tlb_guest_allis() asm volatile("tlbi alle1is\n\t" \

"dsb sy\n\t" \

"isb\n\t" \

::: "memory", "cc")

Initialization flow

First, vmm_main.c calls int __cpuinit vmm_scheduler_init(void), which initializes the scheduler timer events.

int __cpuinit vmm_scheduler_init(void){

....

/* Initialize timer events (Per Host CPU) */

INIT_TIMER_EVENT(&schedp->ev, &scheduler_timer_event, schedp);

INIT_TIMER_EVENT(&schedp->sample_ev,

&scheduler_sample_event, schedp);

....

/* Start timer events */

vmm_timer_event_start(&schedp->ev, 0);

vmm_timer_event_start(&schedp->sample_ev, SAMPLE_EVENT_PERIOD);

....

INIT_TIMER_EVENT sets the handler of &schedp->ev to the following function:

static void scheduler_timer_event(struct vmm_timer_event *ev)

{

struct vmm_scheduler_ctrl *schedp = &this_cpu(sched);

if (schedp->irq_regs) {

vmm_scheduler_switch(schedp, schedp->irq_regs);

}

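}

The handler runs vmm_scheduler_switch. schedp->current_vcpu is the VCPU that is currently running; if its vcpu->preempt_count is zero the scheduler picks the next VCPU, otherwise the timer event is simply restarted.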

static void vmm_scheduler_switch(struct vmm_scheduler_ctrl *schedp,

arch_regs_t *regs) // arch_regs are only updated in user mode

{

struct vmm_vcpu *vcpu = schedp->current_vcpu;

if (!regs) {

/* This should never happen !!! */

vmm_panic("%s: null pointer to regs.\n", __func__);

}

if (vcpu) {

if (!vcpu->preempt_count) {

vmm_scheduler_next(schedp, &schedp->ev, regs);

} else {

vmm_timer_event_restart(&schedp->ev);

}

} else {

vmm_scheduler_next(schedp, &schedp->ev, regs);

}

}

vmm_scheduler_next(schedp, &schedp->ev, regs) handles two cases: first-time scheduling and normal scheduling. It finishes handling the current VCPU and then calls vmm_timer_event_start(ev, next_time_slice) again to arm the next timer event.

static void vmm_scheduler_next(struct vmm_scheduler_ctrl *schedp,

struct vmm_timer_event *ev,

arch_regs_t *regs)

{

/* Record current state & timestamp */

.....

u64 next_time_slice = VMM_VCPU_DEF_TIME_SLICE;

u64 tstamp = vmm_timer_timestamp();

......

/* First time scheduling */

if (!current) {

rc = rq_dequeue(schedp, &next, &next_time_slice);

vmm_write_lock_irqsave_lite(&next->sched_lock, nf);

arch_vcpu_switch(NULL, next, regs);

next->state_ready_nsecs += tstamp - next->state_tstamp;

arch_atomic_write(&next->state, VMM_VCPU_STATE_RUNNING);

next->state_tstamp = tstamp;

schedp->current_vcpu = next;

schedp->current_vcpu_irq_ns = schedp->irq_process_ns;

/* Start the next timer event */

vmm_timer_event_start(ev, next_time_slice);

vmm_write_unlock_irqrestore_lite(&next->sched_lock, nf);

return;

}

/* Normal scheduling */

....

}
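The timer-driven flow above (timer event fires, check preempt_count, then either pick the next VCPU or restart the timer) can be condensed into a small model. This is a sketch under simplifying assumptions: `Sched`, `Vcpu`, `scheduler_switch`, `scheduler_next` and `DEF_TIME_SLICE` are hypothetical stand-ins, the ready queue is a plain FIFO, and locking and register save/restore are left out.

```python
from collections import deque

DEF_TIME_SLICE = 10  # hypothetical default time slice, arbitrary units


class Vcpu:
    def __init__(self, name, time_slice=DEF_TIME_SLICE):
        self.name = name
        self.time_slice = time_slice
        self.preempt_count = 0  # > 0 means "do not preempt me now"


class Sched:
    def __init__(self, vcpus):
        self.ready = deque(vcpus)
        self.current = None
        self.timer = None  # duration the scheduler timer event is armed for


def scheduler_next(s):
    # Put the outgoing VCPU back, take the next one, arm the timer for it.
    if s.current is not None:
        s.ready.append(s.current)
    s.current = s.ready.popleft()
    s.timer = s.current.time_slice
    return "switched to " + s.current.name


def scheduler_switch(s):
    # Called from the timer event handler.
    cur = s.current
    if cur is not None and cur.preempt_count:
        return "restarted timer"  # keep running, timer re-armed unchanged
    return scheduler_next(s)
```

The first call takes the "first time scheduling" path because `current` is still `None`; later calls preempt only when the running VCPU holds no preemption count.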

Virtualization mechanisms on different ARM architectures

without virtualization extension

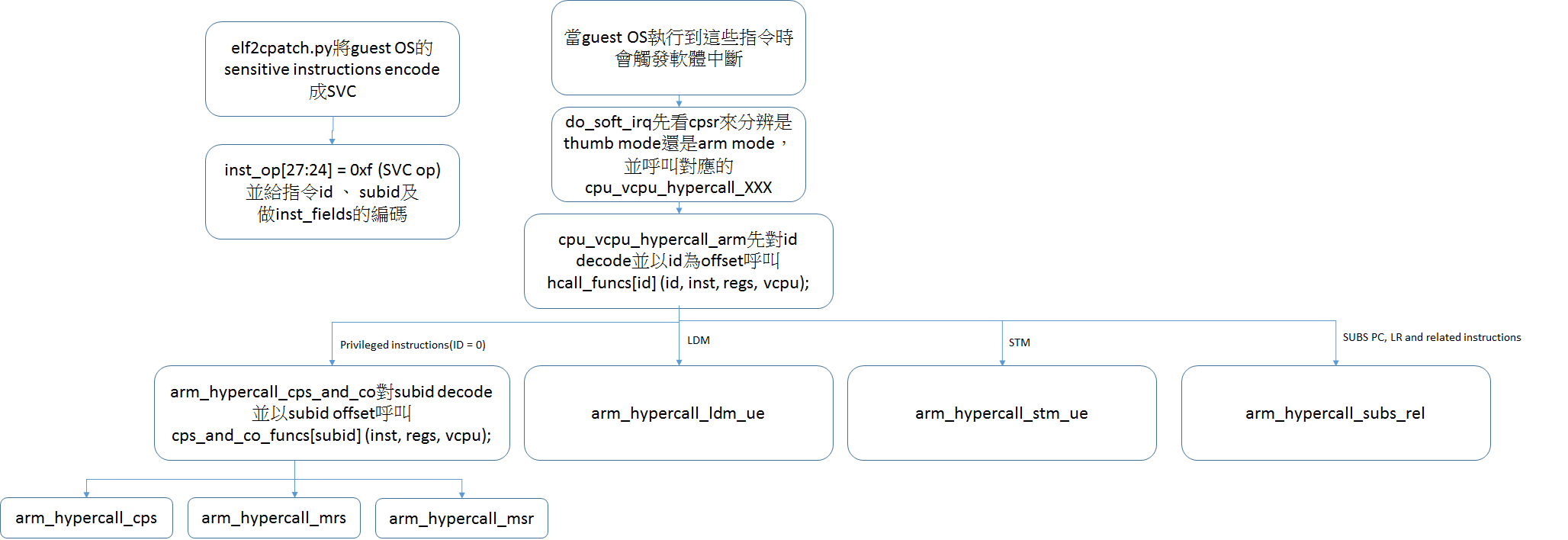

arm32 instruction virtualization

- elf2cpatch.py

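- Encodes sensitive non-privileged instructions as SVC instructions and gives each encoded instruction a unique inst_id

- Taking msr (immediate) as an example: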

# MSR (immediate)

# Syntax:

# msr<c> <spec_reg>, #<const>

# Fields:

# cond = bits[31:28]

# R = bits[22:22]

# mask = bits[19:16]

# imm12 = bits[11:0]

# Hypercall Fields:

# inst_cond[31:28] = cond

# inst_op[27:24] = 0xf

# inst_id[23:20] = 0

# inst_subid[19:17] = 2

# inst_fields[16:13] = mask

# inst_fields[12:1] = imm12

# inst_fields[0:0] = R

def convert_msr_i_inst(hxstr):

hx = int(hxstr, 16)

inst_id = 0

inst_subid = 2

cond = (hx >> 28) & 0xF

R = (hx >> 22) & 0x1

mask = (hx >> 16) & 0xF

imm12 = (hx >> 0) & 0xFFF

rethx = 0x0F000000

rethx = rethx | (cond << 28)

rethx = rethx | (inst_id << 20)

rethx = rethx | (inst_subid << 17)

rethx = rethx | (mask << 13)

rethx = rethx | (imm12 << 1)

rethx = rethx | (R << 0)

return rethx

- instruction emulation

The exception vector is registered in cpu_entry.S:

_start_vect:

ldr pc, __reset

ldr pc, __undefined_instruction

ldr pc, __software_interrupt /* __software_interrupt: .word _soft_irq */

/* EXCEPTION_HANDLER _soft_irq, 4

*PUSH_REGS

*CALL_EXCEPTION_CFUNC do_soft_irq

*PULL_REGS

*/

......

void do_soft_irq(arch_regs_t *regs)

{

........

/* If vcpu priviledge is user then generate exception

* and return without emulating instruction

*/

if ((arm_priv(vcpu)->cpsr & CPSR_MODE_MASK) == CPSR_MODE_USER) {

vmm_vcpu_irq_assert(vcpu, CPU_SOFT_IRQ, 0x0);

} else {

if (regs->cpsr & CPSR_THUMB_ENABLED) {

rc = cpu_vcpu_hypercall_thumb(vcpu, regs,

*((u32 *)regs->pc));

} else {

rc = cpu_vcpu_hypercall_arm(vcpu, regs,

*((u32 *)regs->pc));

}

}

........

}

Taking ARM-mode emulation as an example, in cpu_vcpu_hypercall_arm.c:

int cpu_vcpu_hypercall_arm(struct vmm_vcpu *vcpu,

arch_regs_t *regs, u32 inst)

{

u32 id = ARM_INST_DECODE(inst,

ARM_INST_HYPERCALL_ID_MASK,

ARM_INST_HYPERCALL_ID_SHIFT);

return hcall_funcs[id](id, inst, regs, vcpu);

}

The previously encoded instruction is decoded through the following table:

static int (* const hcall_funcs[])(u32 id, u32 inst,

arch_regs_t *regs, struct vmm_vcpu *vcpu) = {

arm_hypercall_cps_and_co, /* ARM_HYPERCALL_CPS_ID */

arm_hypercall_ldm_ue, /* ARM_HYPERCALL_LDM_UE_ID0 */

arm_hypercall_ldm_ue, /* ARM_HYPERCALL_LDM_UE_ID1 */

arm_hypercall_ldm_ue, /* ARM_HYPERCALL_LDM_UE_ID2 */

.......

};

If id is 0, a second decode on the sub-id selects the function that emulates the instruction:

static int (* const cps_and_co_funcs[])(u32 inst,

arch_regs_t *regs, struct vmm_vcpu *vcpu) = {

arm_hypercall_cps, /* ARM_HYPERCALL_CPS_SUBID */

arm_hypercall_mrs, /* ARM_HYPERCALL_MRS_SUBID */

arm_hypercall_msr_i, /* ARM_HYPERCALL_MSR_I_SUBID */

arm_hypercall_msr_r, /* ARM_HYPERCALL_MSR_R_SUBID */

arm_hypercall_rfe, /* ARM_HYPERCALL_RFE_SUBID */

arm_hypercall_srs, /* ARM_HYPERCALL_SRS_SUBID */

arm_hypercall_wfx, /* ARM_HYPERCALL_WFI_SUBID */

arm_hypercall_smc /* ARM_HYPERCALL_SMC_SUBID */

};

Taking mrs as an example:

/* Emulate 'mrs' hypercall */

static int arm_hypercall_mrs(u32 inst,

arch_regs_t *regs, struct vmm_vcpu *vcpu)

{

register u32 Rd;

Rd = ARM_INST_BITS(inst,

ARM_HYPERCALL_MRS_RD_END,

ARM_HYPERCALL_MRS_RD_START);

if (Rd == 15) {

arm_unpredictable(regs, vcpu, inst, __func__);

return VMM_EFAIL;

}

if (ARM_INST_BIT(inst, ARM_HYPERCALL_MRS_R_START)) {

cpu_vcpu_reg_write(vcpu, regs, Rd,

cpu_vcpu_spsr_retrieve(vcpu));

} else {

cpu_vcpu_reg_write(vcpu, regs, Rd,

cpu_vcpu_cpsr_retrieve(vcpu, regs));

}

regs->pc += 4;

return VMM_OK;

}
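To see that the patch-time encoding and the run-time decode agree, the sketch below re-implements convert_msr_i_inst from the Hypercall Fields comment and adds a matching decoder. `decode_hypercall` and the `CPS_AND_CO` name list are hypothetical helpers written for this illustration; the bit positions come from the comment block above, and the mask/imm12/R layout applies only to the MSR (immediate) sub-id.

```python
# Order follows the cps_and_co_funcs table shown above.
CPS_AND_CO = ["cps", "mrs", "msr_i", "msr_r", "rfe", "srs", "wfx", "smc"]


def convert_msr_i_inst(hxstr):
    # Encode an ARM "msr <spec_reg>, #<const>" as an SVC-style hypercall.
    hx = int(hxstr, 16)
    cond = (hx >> 28) & 0xF
    r_bit = (hx >> 22) & 0x1
    mask = (hx >> 16) & 0xF
    imm12 = hx & 0xFFF
    inst_id, inst_subid = 0, 2
    return (0x0F000000 | (cond << 28) | (inst_id << 20) | (inst_subid << 17)
            | (mask << 13) | (imm12 << 1) | r_bit)


def decode_hypercall(inst):
    # First-level id, second-level sub-id, then the MSR (immediate) fields.
    return {
        "cond": (inst >> 28) & 0xF,
        "op": (inst >> 24) & 0xF,
        "id": (inst >> 20) & 0xF,
        "subid": (inst >> 17) & 0x7,
        "mask": (inst >> 13) & 0xF,
        "imm12": (inst >> 1) & 0xFFF,
        "R": inst & 0x1,
    }


encoded = convert_msr_i_inst("E321F0D3")  # msr CPSR_c, #0xd3
fields = decode_hypercall(encoded)
handler = CPS_AND_CO[fields["subid"]]
```

For this input the encoded word is 0xEF0421A6: id 0 selects the "cps and co" group and sub-id 2 selects the msr (immediate) emulation, with mask and imm12 recovered unchanged.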

with virtualization extension

armv7ve

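- Includes the Security Extensions, LPAE, and the Virtualization Extensions

- Cortex-A15 / Cortex-A7 (with big.LITTLE support)

- The principle is the same as the instruction emulation described above, except that the contents of the HSR (Hyp Syndrome Register) must be read with mrc.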

- mrc p15, 4, %0, c5, c2, 0
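Once the HSR value has been read, the hypervisor splits it into its architectural fields to decide what to emulate. The sketch below decodes the ARMv7 HSR layout (EC in bits [31:26], IL in bit 25, ISS in bits [24:0]); the `decode_hsr` helper and the short `EC_NAMES` table are illustrative and list only a handful of exception classes, they are not Xvisor code.

```python
HSR_EC_SHIFT = 26
HSR_EC_MASK = 0x3F
HSR_IL_BIT = 1 << 25
HSR_ISS_MASK = 0x01FFFFFF

# A few exception classes; anything else is reported as "other".
EC_NAMES = {
    0x01: "trapped WFI/WFE",
    0x03: "trapped CP15 MCR/MRC",
    0x12: "HVC",
    0x13: "trapped SMC",
    0x24: "data abort routed to Hyp mode",
}


def decode_hsr(hsr):
    ec = (hsr >> HSR_EC_SHIFT) & HSR_EC_MASK
    return {
        "ec": ec,
        "name": EC_NAMES.get(ec, "other"),
        "il": bool(hsr & HSR_IL_BIT),  # True: trapped instruction was 32-bit
        "iss": hsr & HSR_ISS_MASK,     # class-specific syndrome bits
    }
```

A dispatcher would typically switch on `ec` first and only then interpret `iss`, since the meaning of the syndrome bits depends on the exception class.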

armv8

Design document summary

Original: https://github.com/xvisor/xvisor/blob/master/docs/DesignDoc

Chapter1: Modeling Virtual Machine

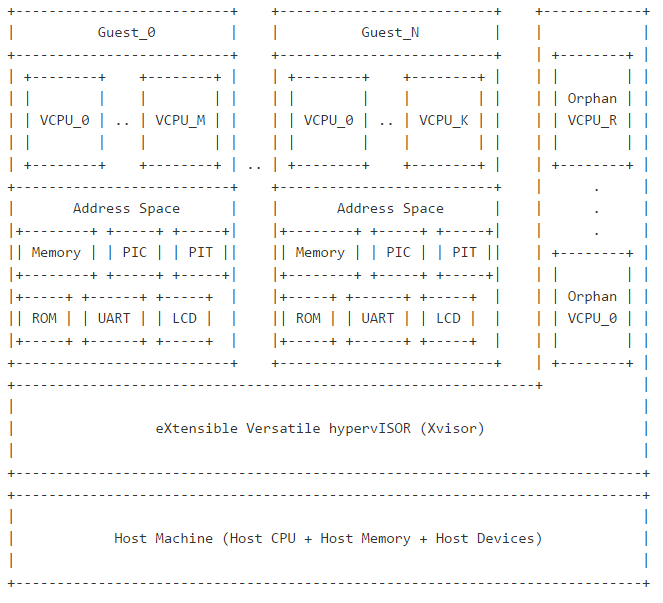

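- What is a VM (virtual machine)? There are generally two kinds:

- system virtual machine: supports the execution of a complete OS

- process virtual machine: supports a single process

- Xvisor is virtualization software for hardware systems that runs directly on the host machine, i.e. a Native/Type-1 hypervisor/VMM

A system virtual machine is usually called a guest, and a CPU inside a guest is called a VCPU (Virtual CPU). VCPUs come in two kinds:

- One that belongs to a Guest is called a Normal VCPU

- One that belongs to no Guest is called an Orphan VCPU (Orphan VCPUs are created for various background processes and running management daemons)

- Today's CPUs have at least two privilege modes:

- User mode is the least privileged and runs Normal VCPUs

- Supervisor mode is the most privileged and runs Orphan VCPUs

- Xvisor uses Orphan VCPUs to run its various background processes and management daemons

The figure below shows Xvisor's System Virtual Machine Model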

Chapter2: Hypervisor Configuration

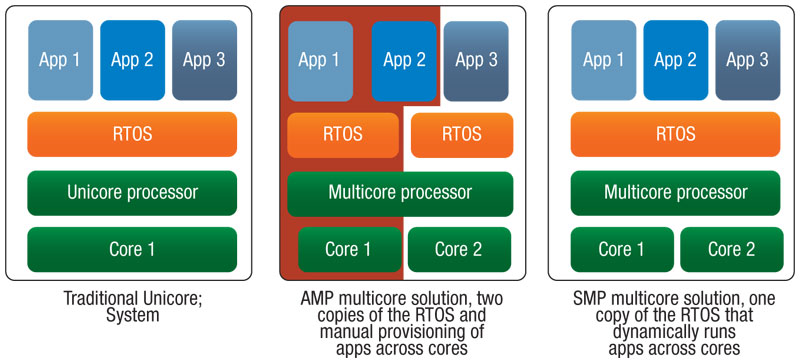

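In the early days, configuring an OS for a system was quite simple because only a single CPU ran the OS. Today, however, powerful single-core and multi-core processors can be configured for many different applications.

A multi-core processor can be managed by a single symmetric multiprocessing (SMP) operating system, in which processes can migrate between cores to balance the load and improve system efficiency. Alternatively, under asymmetric multiprocessing (AMP), each core is treated as an independent processor and can be assigned to a different OS.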

The figure below compares traditional single-core, AMP, and SMP operation:

(Image source: http://www.rtcmagazine.com/articles/view/101663)

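- Challenges faced by SMP and AMP:

- Depending on the workload, SMP often does not scale well, because it relies on multiple buses or crossbar switches, which drives power consumption too high

- With AMP it is hard to configure which OS gets access to which device. Operating systems assume they have full control over the hardware they detect, so this frequently causes conflicts in the AMP case

In addition, vSMP (virtual symmetric multiprocessing) adds an extra "companion core" (co-processor), usually built on a low-power process, so it mostly handles low-frequency operation. This core is effectively invisible to the OS: neither the OS nor applications know it exists, yet they use it automatically, so no new code or software control is needed. vSMP mainly maps two or more virtual processors onto a single virtual machine, which lets multiple virtual processors be assigned to a virtual machine that has at least two logical processors. The advantages are cache coherency, high OS efficiency, and optimized power consumption.

Xvisor provides technology to partition or virtualize processor cores, memory, and devices among multiple OSes

- It uses a tree structure called the device tree to simplify configuration on single-core or multi-core systems, so a system designer can easily mix and match AMP, SMP, and core virtualization configurations when building a system

- In Linux, on an of_platform architecture (e.g. PowerPC), the kernel at boot expects a DTB file (Device Tree Blob / Flattened Device Tree) passed in by the boot loader. A DTB is the binary produced by compiling a DTS (Device Tree Source) with DTC (Device Tree Compiler). of_platform probes only those drivers that are compatible with, or match, devices mentioned in the DTB.

- Unlike Linux of_platform, Xvisor does not have to populate its device tree from a DTB. The Xvisor device tree can come from several sources: a DTB, or for example an ACPI table (Advanced Configuration and Power Interface). Put simply, the Xvisor device tree is just a data structure for managing hypervisor configuration. By default Xvisor always supports populating the device tree from a DTS, and it includes the DTC from the Linux kernel source code and a lightweight DTB parsing library (libfdt) for this purpose

The following constraints apply when updating or populating the Xvisor device tree: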

- Node Name: It must have characters from one of the following only,

- digit: [0-9]

- lowercase letter: [a-z]

- uppercase letter: [A-Z]

- underscore: _

- dash: -

- Attribute Name: It must have characters from one of the following only,

- digit: [0-9]

- lowercase letter: [a-z]

- uppercase letter: [A-Z]

- underscore: _

- dash: -

- hash: #

Attribute String Value: A string attribute value must end with NULL character (i.e. ‘\0’ or character value 0). For a string list, each string must be separated by exactly one NULL character.

Attribute 32-bit unsigned Value: A 32-bit integer value must be represented in big-endian format or little-endian format based on the endianness of host CPU architecture.

Attribute 64-bit unsigned Value: A 64-bit integer value must be represented in big-endian format or little-endian format based on the endianness of host CPU architecture.

- Note: Architecture specific code must ensure that the above constraints are satisfied while populating the device tree.
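The constraints above are mechanical enough to check in code. The following sketch is a hypothetical validator and encoder, not part of Xvisor: the function names are invented for illustration, empty names are rejected as an assumption, and `struct`'s native byte order (`=`) stands in for "the endianness of the host CPU".

```python
import re
import struct
import sys

# Character classes taken from the lists above.
NODE_NAME_RE = re.compile(r"[0-9a-zA-Z_-]+")
ATTR_NAME_RE = re.compile(r"[0-9a-zA-Z_#-]+")


def valid_node_name(name):
    return NODE_NAME_RE.fullmatch(name) is not None


def valid_attr_name(name):
    return ATTR_NAME_RE.fullmatch(name) is not None


def encode_string_list(strings):
    # Each string is terminated by exactly one NUL character.
    return b"".join(s.encode("ascii") + b"\0" for s in strings)


def encode_u32(value):
    return struct.pack("=I", value)  # host byte order, 4 bytes


def encode_u64(value):
    return struct.pack("=Q", value)  # host byte order, 8 bytes
```

Note that `#` is accepted only in attribute names, so `#address-cells` is a valid attribute name but not a valid node name.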

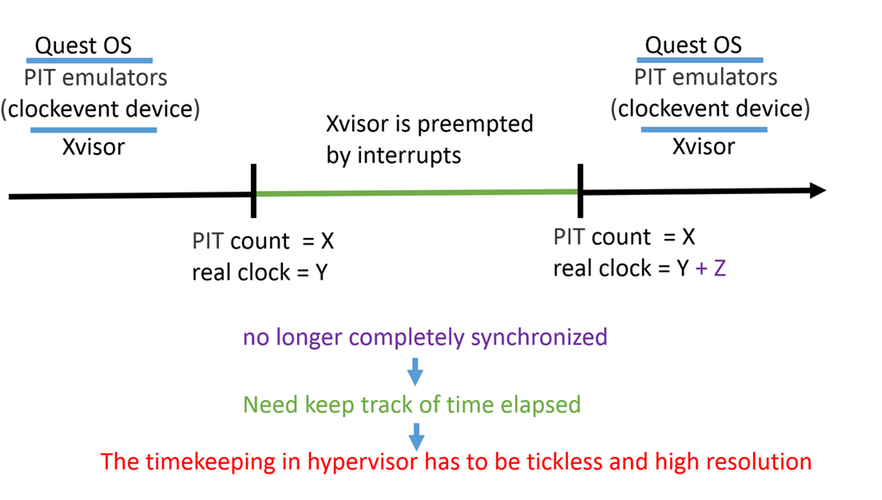

- Note: For standard attributes used by Xvisor, refer to the source code.

Chapter3: Hypervisor Timer

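Like many operating systems, a hypervisor needs a timekeeping subsystem to track elapsed time. Xvisor's timekeeping subsystem is called the hypervisor timer.

- An OS timekeeping subsystem does two important things:

- 1. Track elapsed time: the simplest approach is to count periodic interrupts, but this is very inaccurate. A more precise approach uses a clocksource device (i.e. a free-running, cycle-accurate hardware counter) as the time reference

- 2. Schedule upcoming events: the OS keeps a per-CPU list of events ordered by expiry time, and the event that expires earliest is handled first

Timekeeping is hard to get right in a hypervisor, mainly because time is shared between the host and many guests. Guest interrupts, and hence the guests' clock sources, are not completely in sync with real time, which causes problems for anything that relies on real time.

Legacy guest OSes keep time by counting periodic interrupts, and this leads to a serious problem. The interrupts may come from the PIT or RTC, but the host virtualization engine may be unable to deliver them at the proper rate, so guest time can fall behind. The problem is more pronounced at high interrupt rates (e.g. 1000 Hz).

- Three solutions have been proposed:

- If the guest has its own time source, so that 'wall clock' or 'real time' does not need adjusting, the problem can be ignored

- If that is not sufficient, additional interrupts can be injected into the guest to raise the effective interrupt rate; this approach runs into complications under extreme conditions, where host load or guest lag is too large to compensate for

- The guest itself must be aware of lost ticks and compensate internally; this is usually feasible only in theory, and many problems have to be overcome in practice

Conclusion: in a hypervisor, timekeeping must be tickless and high resolution, and PIT emulators must keep backlogs of pending periodic interrupts

Because the Xvisor timer is derived from the Linux hrtimer subsystem, it has the following features:

- 64-bit Timestamp: the timestamp is the time elapsed since Xvisor booted, in nanoseconds

- Timer events: a timer event can be created or destroyed with an expiry callback handler and an associated expiry time

- A timer event stops automatically when it expires

- To get a periodic timer event, it must be restarted manually from its expiry callback handler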
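The one-shot behaviour of timer events, and the "restart from the handler" idiom for periodic work, can be illustrated with a toy event queue. This is a sketch, not the Xvisor API: `HypTimer`, `TimerEvent`, `start`, `restart` and `advance` are hypothetical names, and time is a plain integer standing in for the nanosecond timestamp.

```python
import heapq
import itertools


class TimerEvent:
    def __init__(self, handler):
        self.handler = handler   # expiry callback: handler(timer, event)
        self.duration = None
        self.active = False


class HypTimer:
    def __init__(self):
        self.now = 0             # stands in for the 64-bit ns timestamp
        self._queue = []         # (expiry, sequence, event), earliest first
        self._seq = itertools.count()

    def start(self, ev, duration):
        ev.duration = duration
        ev.active = True
        heapq.heappush(self._queue, (self.now + duration, next(self._seq), ev))

    def restart(self, ev):
        self.start(ev, ev.duration)  # re-arm with the previous duration

    def advance(self, until):
        # Fire every event that expires up to and including `until`.
        while self._queue and self._queue[0][0] <= until:
            expiry, _, ev = heapq.heappop(self._queue)
            self.now = expiry
            ev.active = False        # events stop automatically on expiry
            ev.handler(self, ev)
        self.now = until


ticks, oneshot_hits = [], []


def periodic(timer, ev):
    ticks.append(timer.now)
    timer.restart(ev)                # periodic only because we re-arm here


def oneshot(timer, ev):
    oneshot_hits.append(timer.now)


timer = HypTimer()
p, o = TimerEvent(periodic), TimerEvent(oneshot)
timer.start(p, 10)
timer.start(o, 25)
timer.advance(35)
```

After the run, the periodic handler has fired at 10, 20 and 30 and is still armed, while the one-shot handler has fired once at 25 and stays inactive.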

To provide the features above, the hypervisor needs the following two things:

- one global clocksource device for each host CPU

- one clockevent device for each host CPU

The figure below illustrates tick distortion (image source: https://arm4fun.hackpad.com/Xvisor-ARMv8-Timer-BVc6RIuDGcD)

Chapter4: Hypervisor Manager

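The Hypervisor Manager creates and manages VCPU & Guest instances. It also provides routines for triggering VCPU state changes and notifies the hypervisor scheduler whenever a VCPU state changes.

As in an ordinary OS, a VCPU in Xvisor consists of an architecture dependent part and an architecture independent part. Details of both parts follow:

- The architecture dependent part of VCPU context consists of:

- Arch Registers: registers that the processor updates only in user mode. These are usually the general purpose registers and the state flags, which the processor updates automatically. Each VCPU's arch registers are referred to as arch_regs_t *regs

- Arch Private: registers that the processor updates only in supervisor mode. When a Normal VCPU tries to read/write such registers, an exception is taken and a virtual value is returned/updated. In most cases arch private also holds additional data structures (e.g. MMU context, shadow TLB, shadow page table, etc.). Each VCPU's arch private is referred to as void *arch_priv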

- The architecture independent part of VCPU context consists of:

- ID: Globally unique identification number.

- SUBID: Identification number unique within the parent Guest. (Only for Normal VCPUs)

- Name: The name given to this VCPU. (Only for Orphan VCPUs)

- Device Tree Node: Pointer to the VCPU device tree node. (Only for Normal VCPUs)

- Is Normal: Flag showing whether this VCPU is Normal or Orphan.

- Guest: Pointer to the parent Guest. (Only for Normal VCPUs)

- State: Current VCPU state. (Explained below.)

- Reset Count: Number of times the VCPU has been reset.

- Start PC: Starting value of the Program Counter.

- Start SP: Starting value of the Stack Pointer. (Only for Orphan VCPUs)

- Virtual IRQ Info.: Management information for VCPU virtual interrupts.

- Scheduling Info.: Management information required by the scheduler (e.g. priority, preempt_count, time_slice, etc.)

- Waitqueue Info.: Information required by waitqueues.

- Device Emulation Context: Pointer to private information required by device emulation framework per VCPU.

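A VCPU is in exactly one state at any time. The possible states are briefly described below:

- UNKNOWN: The VCPU does not belong to any Guest and is not an Orphan VCPU. To keep the memory footprint low, memory for the maximum number of VCPUs is pre-allocated and those VCPUs are placed in this state

- RESET: The VCPU is initialized and waiting to become READY. To create a new VCPU, the VCPU scheduler takes one in the UNKNOWN state and initializes it, then moves the newly created VCPU to the RESET state

- READY: The VCPU is ready to run on hardware

- RUNNING: The VCPU is currently running on hardware

- PAUSED: The VCPU is suspended. A VCPU is set to PAUSED when it is found to be idle

- HALTED: The VCPU is halted. A VCPU is set to HALTED when it misbehaves

A VCPU can change state for many reasons, e.g. architecture specific code, a hypervisor thread, the scheduler, or an emulated device, and they cannot all be listed. The Hypervisor Manager must nevertheless guarantee that VCPU state changes follow a finite-state machine.

(Figure: finite-state machine for VCPU state changes)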
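Enforcing a finite-state machine usually comes down to a table of allowed transitions that every state change is checked against. The sketch below shows that pattern only. The `ALLOWED` table is an assumption made up for illustration (it is not taken from the missing figure or from the Xvisor source), and `State`, `Vcpu` and `set_state` are hypothetical names.

```python
from enum import Enum


class State(Enum):
    UNKNOWN = "unknown"
    RESET = "reset"
    READY = "ready"
    RUNNING = "running"
    PAUSED = "paused"
    HALTED = "halted"


# Assumed transition table, for illustration only.
ALLOWED = {
    State.UNKNOWN: {State.RESET},
    State.RESET: {State.READY, State.UNKNOWN},
    State.READY: {State.RUNNING, State.PAUSED, State.HALTED,
                  State.RESET, State.UNKNOWN},
    State.RUNNING: {State.READY, State.PAUSED, State.HALTED,
                    State.RESET, State.UNKNOWN},
    State.PAUSED: {State.READY, State.HALTED, State.RESET, State.UNKNOWN},
    State.HALTED: {State.RESET, State.UNKNOWN},
}


class Vcpu:
    def __init__(self):
        self.state = State.UNKNOWN  # pre-allocated, not yet in use

    def set_state(self, new_state):
        # Reject any change that the table does not list.
        if new_state not in ALLOWED[self.state]:
            raise ValueError(
                "illegal transition %s -> %s"
                % (self.state.name, new_state.name))
        self.state = new_state
```

Routing every caller through one checked `set_state` is what lets many unrelated code paths request state changes without corrupting the VCPU life cycle.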

The number of virtual interrupts of a VCPU and their priorities are provided by architecture specific code. Insertion and removal of a VCPU virtual interrupt is triggered by architecture independent code.

A Guest instance contains the following:

- ID: Globally unique identification number.

- Device Tree Node: Pointer to the Guest device tree node.

- VCPU Count: Number of VCPU instances belonging to this Guest.

- VCPU List: List of VCPU instances belonging to this Guest.

- Guest Address Space Info: Information required for managing Guest physical address space.

- Arch Private: Architecture dependent context of this Guest.

A Guest Address Space is architecture independent and contains the following:

- Device Tree Node: Pointer to Guest Address Space device tree node

- Guest: Pointer to Guest to which this Guest Address Space belongs.

- Region List: A set of “Guest Regions”

- Device Emulation Context: Pointer to private information required by device emulation framework per Guest Address Space.

Each Guest Region has a unique Guest Physical Address and Physical Size. A Guest Region takes one of the following three forms:

- Real Guest Region: gives direct access to a host machine/device (e.g. RAM, UART, etc.) by mapping guest physical addresses directly to host physical addresses

- Virtual Guest Region: gives access to an emulated device (e.g. emulated PIC, emulated Timer, etc.). Such a region is typically tied to an emulated device, and architecture specific code is responsible for redirecting virtual guest region read/write accesses to the Xvisor device emulation framework.

- Aliased Guest Region: gives access to another Guest Region at an alternate Guest Physical Address
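How a guest physical address is resolved against the three region types can be sketched as follows. The `Region` record, `find_region` and `resolve` are hypothetical names for this illustration, the addresses in the usage are arbitrary, and the alias depth limit is an assumption added to keep the example from looping on a bad configuration.

```python
from dataclasses import dataclass


@dataclass
class Region:
    name: str
    kind: str             # "real", "virtual", or "alias"
    gphys: int            # guest physical base address
    size: int
    hphys: int = 0        # host physical base (real regions only)
    alias_gphys: int = 0  # target guest physical base (alias regions only)


def find_region(regions, gpa):
    for r in regions:
        if r.gphys <= gpa < r.gphys + r.size:
            return r
    return None


def resolve(regions, gpa, depth=0):
    # Returns (action, region name, address or offset).
    r = find_region(regions, gpa)
    if r is None or depth > 4:
        return ("fault", None, gpa)
    offset = gpa - r.gphys
    if r.kind == "real":
        return ("host", r.name, r.hphys + offset)   # direct mapping
    if r.kind == "virtual":
        return ("emulate", r.name, offset)          # hand to device emulation
    return resolve(regions, r.alias_gphys + offset, depth + 1)


regions = [
    Region("ram", "real", 0x80000000, 0x1000, hphys=0x90000000),
    Region("uart0", "virtual", 0x1C090000, 0x1000),
    Region("ram_alias", "alias", 0x0, 0x1000, alias_gphys=0x80000000),
]
```

An access through the alias at guest address 0x20 ends up at the same host address as a direct access to the RAM region at 0x80000020.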

Chapter5: Hypervisor Scheduler

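Xvisor's hypervisor scheduler is generic and pluggable. It updates the per-CPU ready queue whenever it receives a VCPU state change notification from the hypervisor manager. The hypervisor scheduler uses a per-CPU hypervisor timer event to allocate a time slice to a VCPU. When the scheduler timer event of a CPU expires, the scheduler uses a scheduling algorithm to find the next VCPU and configures the scheduler timer event for it.

To Xvisor, a Normal VCPU is a black box; control returns only through an exception or interrupt. While Xvisor is executing, it is in one of the following contexts:

- IRQ Context: when an interrupt arrives from some external host device

- Normal context: when emulating some functionality, instruction, or I/O on behalf of a Normal VCPU

- Orphan context: when running some part of Xvisor as an Orphan VCPU or thread

With the help of architecture specific exception and interrupt handlers, the scheduler keeps track of the current execution context. A VCPU context switch is expected to perform the following high-level steps:

- Move arch registers (arch_regs_t) from the stack to the current VCPU's arch registers

- Restore arch registers (arch_regs_t) of the next VCPU onto the stack

- Switch the context of CPU-specific resources such as the MMU, floating point subsystem, etc.

A VCPU context switch is expected in the following situations:

- VCPU preemption: when the time slice allotted to the current VCPU expires, a VCPU context switch is invoked

- When a Normal VCPU misbehaves (e.g. accesses an invalid memory address), it is halted/paused through the hypervisor manager API

- An Orphan VCPU pauses voluntarily (e.g. sleeps)

- An Orphan VCPU voluntarily yields its time slice

- The VCPU state is changed by another VCPU through the hypervisor manager API

Several scheduling strategies can be selected in the Xvisor menuconfig options. Every strategy works with the following per-VCPU information:

- Priority: the priority of each VCPU; a higher value means higher priority

- Time slice: the minimum time a VCPU must get once it is scheduled

- Preempt Count: the number of locks held by this VCPU. A VCPU cannot be preempted while Preempt Count > 0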

- Private Pointer

The following scheduling strategies are currently available:

- Round-Robin (RR)

- Priority Based Round-Robin (PRR)

Xvisor uses PRR by default
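One common way to realize priority based round-robin is one FIFO per priority level, always serving the highest non-empty level. The sketch below shows that general technique and is not the Xvisor implementation; `PrrReadyQueue`, its methods, and the default of eight priority levels are assumptions for illustration.

```python
from collections import deque


class PrrReadyQueue:
    def __init__(self, num_priorities=8):  # level count is an assumption
        self.queues = [deque() for _ in range(num_priorities)]

    def enqueue(self, vcpu_name, priority):
        # A VCPU that becomes READY joins the tail of its priority level.
        self.queues[priority].append(vcpu_name)

    def dequeue(self):
        # Higher value = higher priority, so scan from the top level down.
        for priority in range(len(self.queues) - 1, -1, -1):
            if self.queues[priority]:
                return self.queues[priority].popleft(), priority
        return None


rq = PrrReadyQueue()
rq.enqueue("a", 1)
rq.enqueue("b", 3)
rq.enqueue("c", 3)
```

VCPUs at the same level take turns in FIFO order, while a lower level runs only when every higher level is empty, so a busy high-priority VCPU can starve lower ones in this simple form.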

Chapter7: Hypervisor Threads

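In Xvisor, the threading framework that manages background threads is called Hypervisor Threads. Threads are in fact wrappers around Orphan VCPUs.

To create a thread in Xvisor, five things are required:

- Name: the name of the thread

- Function: the entry function pointer of the thread

- Data: a void pointer to the data passed as the argument to the entry function

- Priority: the priority of the thread; a higher value runs first.

- Time Slice: the minimum time the thread gets once it is scheduled.

There is no need to explicitly create a stack for each thread. The hypervisor threads framework automatically creates a separate, fixed-size stack when each thread is created. The stack size can be changed at compile time through the Xvisor menuconfig options.

A thread's ID, Priority, and Time Slice are the same as those of the underlying Orphan VCPU.

A thread has the following states:

- CREATED: newly created, not yet running

- RUNNING: running

- SLEEPING: sleeping on a waitqueue

- STOPPED: stopped; it may have finished or been forcibly terminated

Each of these states maps directly onto an Orphan VCPU state, so the current state of a thread can be read from the state of its underlying Orphan VCPU.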

Testing

- Register an ARM account

- Download the ARM Foundation v8 model

- Extract it into workspace

- Install the toolchain

sudo apt-get install gcc-aarch64-linux-gnu

sudo apt-get install genext2fs

- Download and build Xvisor

cd workspace

git clone https://github.com/avpatel/xvisor-next.git && cd xvisor-next

export CROSS_COMPILE=aarch64-linux-gnu-

make ARCH=arm generic-v8-defconfig

make

make dtbs

- Prepare the guest OS

make -C tests/arm64/virt-v8/basic

mkdir -p ./build/disk/images/arm64/virt-v8

./build/tools/dtc/dtc -I dts -O dtb -o ./build/disk/images/arm64/virt-v8x2.dtb ./tests/arm64/virt-v8/virt-v8x2.dts

cp -f ./build/tests/arm64/virt-v8/basic/firmware.bin ./build/disk/images/arm64/virt-v8/firmware.bin

cp -f ./tests/arm64/virt-v8/basic/nor_flash.list ./build/disk/images/arm64/virt-v8/nor_flash.list

genext2fs -B 1024 -b 16384 -d ./build/disk ./build/disk.img

./tools/scripts/memimg.py -a 0x80010000 -o ./build/foundation_v8_boot.img ./build/vmm.bin@0x80010000 ./build/disk.img@0x81000000

aarch64-linux-gnu-gcc -nostdlib -nostdinc -e _start -Wl,-Ttext=0x80000000 -DGENTIMER_FREQ=100000000 -DUART_BASE=0x1c090000 -DGIC_DIST_BASE=0x2c001000 -DGIC_CPU_BASE=0x2c002000 -DSPIN_LOOP_ADDR=0x84000000 -DIMAGE=./build/foundation_v8_boot.img -DDTB=./build/arch/arm/board/generic/dts/foundation-v8/one_guest_virt-v8.dtb ./docs/arm/foundation_v8_boot.S -o ./build/foundation_v8_boot.axf

- Test

cd tests/arm64/virt-v8/basic

tclsh armv8_test.tcl ~/workspace/Foundation_Platformpkg/models/Linux64_GCC-4.1/Foundation_Platform

(tclsh must be installed first; the path assumes the Foundation v8 model was extracted into workspace)

reference

- Xvisor: embedded and lightweight hypervisor

- https://github.com/xvisor/xvisor/blob/master/docs/DesignDoc

- https://samlin.hackpad.com/Xvisor–chtGSqPYWG8

- Linux on ARM 64-bit Architecture

- ARMv8-A_Architecture_Reference_Manual_(Issue_A.a) (login required)

- A Virtualization Infrastructure that Supports Pervasive Computing.

- A Choices Hypervisor on the ARM architecture

- Extensions to the ARMv7-A architecture

- 前瞻 資訊科技 - 虛擬化 (1) - Virtualization( V12N ). 薛智文 教授

- 前瞻 資訊科技 - 虛擬化 (2) - Virtualization( V12N ). 薛智文 教授

- An Overview of Microkernel, Hypervisor and Microvisor Virtualization Approaches for Embedded Systems, Asif Iqbal, Nayeema Sadeque and Rafika Ida Mutia, Lund University, Sweden

- Hardware accelerated Virtualization in the ARM Cortex™ Processors

- Popek and Goldberg virtualization requirements

- Syllabus for CS5410 Virtualization Techniques (National Tsing Hua University) (includes course slides)

- GICv2 Architecture Specification

- New Feature: VirtIO based paravirtualization

- linux kernel的中断子系统之(七):GIC代码分析

- Virtio: An I/O virtualization framework for Linux